Introduction

In this collaborative research study, teaching experiments were carried out in Year 9 classes of predominantly Pasifika students. There were three phases. During the planning phase the research team planned activities and envisioned how dialogue and statistical activity would unfold as a result of the classroom activities. Data were collected during the teaching phase, and then the data were analysed using a grounded theory approach. The findings have implications for the teaching of statistical literacy.

Key findings

- All students can develop critical thinking skills when teachers show them how to question statistical reports and conclusions.

- Students can be taught how to question and challenge data in respectful ways.

- Context and literacy skills place huge demands on students, but teachers can help develop the skills and dispositions to experience success.

Major implications

- Teachers need to focus on classroom activities that develop critical thinking skills rather than statistical procedures and skills.

- To promote statistical argument and discussion, teachers need to create a classroom climate that positively engages all students.

- Teachers should provide opportunities for students to work with real data. Activities should move from familiar to unfamiliar contexts.

- To cater for individual needs, teachers need to be able to recognise how to progress students through the stages of statistical literacy.

The research

Background

Gal (2004) sees statistical literacy as students being able to interpret results from studies and reports and to “pose critical questions” about those reports. He argues that since most students are more likely to be consumers of data than researchers, classroom instruction needs to focus on interpreting data rather than collecting data. Aspects of Gal’s notion of statistical literacy have been incorporated in The New Zealand Curriculum, which states, “Statistics also involves interpreting statistical information, evaluating data-based arguments, and dealing with uncertainty and variation” (Ministry of Education, 2007, p. 26). Although the term “critical” does not appear in the statistics achievement objectives, it is embedded in two key competencies of the curriculum: thinking, and using language, symbols, and texts.

The application of this extended notion of statistical literacy is likely to pose a challenge for teachers. In part this may be due to the debate and discussion among educationalists and curriculum developers about the nature of statistics and mathematics and best practice for instruction in each domain (Begg et al., 2004; Rossman, Chance, & Medina, 2006; Shaughnessy, 2007). Statistics educators argue that mathematics strips the context in order to study the abstract structure and generalise, whereas in statistics context is crucial for analysing data. They point out that students need multiple opportunities to relate their comments to a context when drawing conclusions. Given this complexity, it seems likely that it will be challenging for teachers to unpack what is meant by statistics and to understand the implications of statistical thinking, probability and statistical literacy for teaching and learning in their classrooms.

Research questions

The following inter-related research questions guided this study:

- How can we support students to develop statistical literacy within a data evaluation environment?

- How can we develop a classroom culture in which students learn to make and support statistical arguments based on data in response to a question of interest to them?

- What learning activities and tools can be used in the classroom to develop students’ statistical critical thinking skills?

Methodology

The research was carried out in a strongly Pasifika environment. Pasifika students have been identified as the most at-risk group in New Zealand in terms of academic achievement when compared to other New Zealanders (Ministry of Education, 2005). Anthony and Walshaw (2007) raise the concern that there is little reported research that focuses on quality teaching for Pasifika students. According to Alton-Lee (2008), one of the biggest gaps in research and development is what works for Pasifika students at the secondary school level. We believe that the approach taken here was appropriate for a strongly Pasifika classroom.

This approach informed the research project on many levels. Literacy and contextual knowledge were given a greater focus because of the needs of the students. Critical questioning and developing appropriate classroom norms were also seen as particularly important in this research context. However, some of the recommendations may well apply to all students, such as developing contextual knowledge by moving from familiar to unfamiliar contexts.

The teaching experiment had three phases: preparation, classroom teaching, and debriefing and analysis of the teaching episodes. In the preparation phase the research team (teachers and researcher) proposed a sequence of ideas, skills, knowledge and attitudes they hoped students would construct as they participated in activities. The team planned activities to help move students towards the desired learning goals. As part of the activities, students evaluated statistical investigations or activities undertaken by others, including data collection methods, choice of measures and validity of findings. The team envisioned how dialogue and statistical activity would unfold as a result of planned classroom activities.

The teaching took place in regular-timetable mathematics classes. There were two cycles of teaching experiments spread over up to 4 weeks each year. Students’ thinking and understanding were given a central place in the design and implementation of teaching (Alton-Lee, 2008). The data set consisted of video recordings of classroom sessions, copies of the students’ written work, audio recordings of teacher meetings and interviews conducted with students, and field notes of the classroom sessions. Semi-structured interviews were also conducted with a selected number of students (in groups of two or three) from each class while the experiment was in progress. The team engaged in conscious reflection and evaluation of situations as they unfolded.

The research team read the transcripts, watched the videotapes, and formulated conjectures on the learning sequences and students’ learning. These conjectures were tested against other episodes and the rest of the collected data. Coding of the written responses and interview data was undertaken in three stages. First, the team coded the responses independently, based on the developmental hierarchies used in the research literature (Watson, 2006). Level descriptors were then revised, based on newly identified descriptions of features, and the hierarchy was adapted by developing new levels based on responses most suitably accommodated. Finally, the responses were recoded independently by both the researcher and teachers, based on the new hierarchy. Further discussion was used to resolve disputed categories.
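The independent coding and dispute-resolution step described above can be illustrated with a minimal sketch. It assumes each coder's decisions are stored as a mapping from a response ID to a stage label. The response IDs, the codes, and the function names `find_disputed` and `percent_agreement` are hypothetical and are not taken from the study, which does not report using software or an agreement statistic for this step.

```python
# Hypothetical illustration only: the response IDs and stage codes below
# are invented and are not data from the study.

# Stage labels of the statistical literacy framework used in the study.
STAGES = ("0-1", "2", "3", "4")


def find_disputed(codes_a, codes_b):
    """Return the sorted response IDs on which two independent coders disagree."""
    if not codes_a:
        raise ValueError("No coded responses supplied")
    if codes_a.keys() != codes_b.keys():
        raise ValueError("Both coders must code the same set of responses")
    for code in list(codes_a.values()) + list(codes_b.values()):
        if code not in STAGES:
            raise ValueError(f"Unknown stage label: {code}")
    return sorted(rid for rid in codes_a if codes_a[rid] != codes_b[rid])


def percent_agreement(codes_a, codes_b):
    """Return the percentage of responses given the same stage by both coders."""
    disputed = find_disputed(codes_a, codes_b)
    return 100.0 * (len(codes_a) - len(disputed)) / len(codes_a)


# Invented example codes from two coders working independently.
researcher = {"r01": "2", "r02": "3", "r03": "0-1", "r04": "4"}
teacher = {"r01": "2", "r02": "2", "r03": "0-1", "r04": "4"}

disputed = find_disputed(researcher, teacher)
agreement = percent_agreement(researcher, teacher)
print("Responses to discuss:", disputed)
print("Percent agreement:", agreement)
```

In this sketch the disputed responses are simply listed so that they can be taken to a follow-up discussion. Simple percent agreement is used for readability; it is an assumption of the example, not a measure reported by the research team.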

Findings

Our findings show that almost all Year 9 students were able to accurately extract information from statistical graphs or simple text. This is consistent with a range of findings on statistical and graphical interpretation at Year 8 (Crooks, Smith, & Flockton, 2009; Smith et al., 2007). Interestingly, Smith et al. (2007) also found little difference between the performance of Pasifika students and students of other ethnicities when interpreting data from graphs.

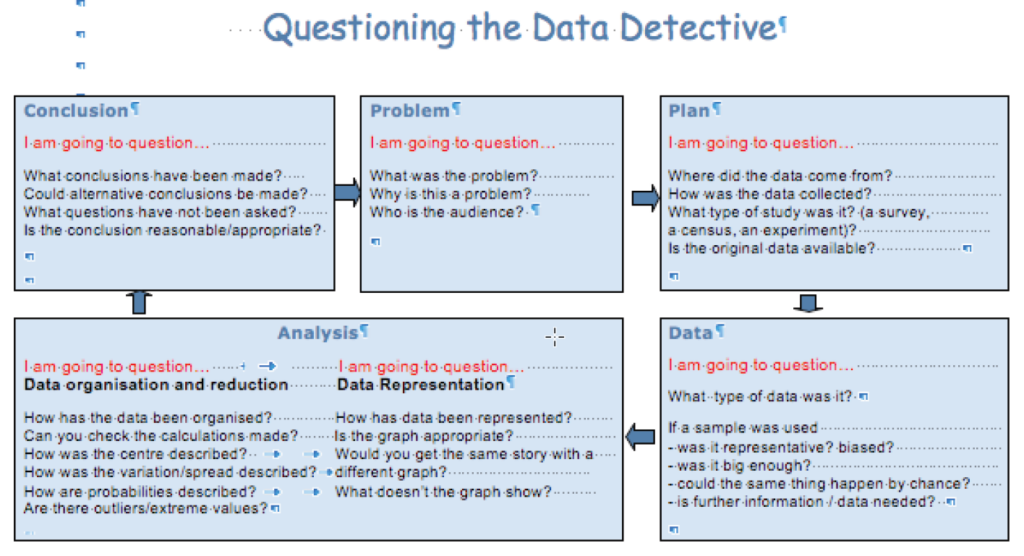

However, statistical literacy is more than the ability to do calculations and read tables and graphs. Students were asked to go beyond this to ask questions of data and statistical reports. Few, if any, students could initially ask a question of the data such as “How or when was the data collected?” or “Who and how many were asked or not asked?” However, with suitable support, students were able to interpret and critically evaluate statistical information and data-related arguments. They were also able to discuss and communicate their understanding and opinions to others. This was achieved in part by providing thinking and questioning routines, such as the Questioning the Data Detective poster (Figure 1), which is modified from the PPDAC (problem, plan, data, analysis, conclusion) poster already seen in many New Zealand classrooms. It was also achieved by providing scaffolding for the literacy and contextual knowledge demands of tasks.

Figure 1: Questioning the Data Detective

We noticed that literacy skills are critical to the development of statistical literacy. Students were required to communicate their opinions clearly, both orally and in writing. Students in the class had different language abilities, which meant they needed to interact in order to improve the group’s statistical communication. This presented various demands on students’ literacy skills. Most of our classroom activities included small-group and whole-class discussion of the data. The two teachers took time to remind the students how to work in groups (e.g., how to agree and disagree and how to present to the class). Our results show that students can be taught how to question and challenge in respectful ways as part of classroom discourse.

Context is an important part of statistical literacy. Our findings show that students need exposure to both familiar and unfamiliar contexts. Context helps students to develop higher-order thinking skills. However, we also found that contextual knowledge can be a barrier for some students. Teachers addressed this in two ways: by starting with familiar contexts before moving to unfamiliar contexts, and by using contexts of interest to the students. This involved handing over some of the control and planning of lessons to the students.

The statistical literacy framework used in this study (based on Watson, 2006) can enable teachers to trace students’ development in statistical literacy during teaching. The framework shows the type of statistical literacy that can be expected at different levels. The four stages are:

- stage 0–1: informal/idiosyncratic

- stage 2: consistent non-critical

- stage 3: early critical

- stage 4: advanced critical

Teachers were able to assess students at the different stages of statistical literacy. Our findings showed that although students were at different stages, it was possible to progress them to the next stages of statistical literacy when teachers recognised the level that students were thinking at and then responded with appropriate support.

Major implications

The findings of this study offer insights that may help the development of more effective and appropriate practices for developing critical statistical literacy. Statistical literacy is more than the ability to do calculations and read tables and graphs. Students should be able to interpret and critically evaluate statistical information and data-related arguments. This has consequences for how the teaching of statistical literacy might be made more effective. For example, ample class time should be spent on discussion and reflection rather than presentation of information.

Literacy knowledge and skills are important aspects in the development of statistical literacy. Statistical messages can be conveyed through both written and oral forms, and in a range of text types (text, graphs, tables). Statistics also has a particular language, and some statistical terms create problems because they are familiar from everyday discourse but take on a different meaning in statistics. Also, students are required to communicate their findings and opinions clearly (orally or in writing) so that others can judge the validity of their arguments. Therefore, an understanding of statistical messages requires the activation of a wide range of literacy skills.

Teachers need to be able to support students to deal with the literacy demands of statistical information. This can be done by using specific literacy strategies, such as pre-reading and vocabulary strategies, and by using writing frames and prompts to promote reading and writing in statistics. Context also plays a key role in the development of statistical literacy, and so students need exposure to both familiar and unfamiliar contexts. Teachers need to provide opportunities for students to work with real data and should choose contexts that suit the needs of their students.

The nature of the learning environment and classroom culture are major contributors to success for students, and teachers need to put a high priority on building a classroom climate that positively engages all students. Students need to understand the importance of sharing their opinions in order to advance their statistical ideas, and teachers should help students to reflect on the purpose of explaining and justifying their thinking to others. This is consistent with The New Zealand Curriculum, which promotes the ideals of having confident, critical and active learners of mathematics (Ministry of Education, 2007).

Over time the classes developed lists of common critical or worry questions that may be helpful when evaluating a statistics-based report. From these lists of critical questions the research team developed the Questioning the Data Detective poster to help students evaluate statistical reports and articles. This poster and other such critical thinking routines may prove useful resources for teachers interpreting the statistical literacy achievement objectives of The New Zealand Curriculum. We recommend that these types of questions or critical thinking routines be introduced in schools because there is a need for students to begin to question statistical reports at an early age.

References

Alton-Lee, A. (2008). Research needs in the school sector. An interview with Dr Adrienne Alton-Lee, February 2008.

Anthony, G., & Walshaw, M. (2007). Effective pedagogy in mathematics/pāngarau: Best evidence synthesis iteration (BES). Wellington: Ministry of Education.

Begg, A., Pfannkuch, M., Camden, M., Hughes, P., Noble, A., & Wild, C. (2004). The school statistics curriculum: Statistics and probability education literature review. Auckland: Auckland Uniservices, University of Auckland.

Crooks, T., Smith, J., & Flockton, L. (2009). Graphs, tables and maps: Mathematics assessment results 2009: National education monitoring report 52. Wellington: Ministry of Education.

Gal, I. (2004). Statistical literacy: Meanings, components, responsibilities. In J. Garfield & D. Ben-Zvi (Eds.), The challenge of developing statistical literacy, reasoning and thinking (pp. 47–78). Dordrecht, The Netherlands: Kluwer.

Ministry of Education. (2005). Learning for tomorrow’s world: Programme for international student assessment (PISA) 2003: New Zealand summary report. Wellington: Author.

Ministry of Education. (2007). The New Zealand curriculum. Wellington: Learning Media.

Rossman, A., Chance, B., & Medina, E. (2006). Some important comparisons between statistics and mathematics, and why teachers should care. In G. Burrill & P. C. Elliott (Eds.), Thinking and reasoning with data and chance (pp. 323–333). Reston, VA: National Council of Teachers of Mathematics.

Shaughnessy, J. M. (2007). Research on statistics learning and reasoning. In F. K. Lester, Jr (Ed.), Second handbook of research on mathematics teaching and learning (pp. 957–1009). Reston, VA: National Council of Teachers of Mathematics.

Smith, J., Crooks, T., Flockton, L., & White, J. (2007). Graphs, tables and maps: Mathematics assessment results 2007: National education monitoring report 46. Wellington: Ministry of Education.

Watson, J. M. (2006). Statistical literacy at school: Growth and goals. Mahwah, NJ: Lawrence Erlbaum.