Introduction

The “summer learning effect” refers to the plateau or decline in students’ school literacy achievement over summer. This limits students’ levels of achievement over time, which can create a barrier to decile 1 schools’ effectiveness. It is a well-known effect both within New Zealand and worldwide, but there is limited research evidence on how to overcome it. Our aim was to identify factors that may help overcome the summer learning effect in reading in decile 1 schools in New Zealand.

Key findings

The summer learning effect was confirmed as a major barrier to ongoing achievement: the gains made over the school year disappeared.

Specific preparation by teachers for students and guidance for their parents were associated with a lower summer learning effect.

When implemented fully, a three-component intervention designed from local evidence was associated with essentially no summer learning effect.

Major implications

Without specific interventions, gains made during the school year in decile 1 schools may be lost.

Context-specific solutions are based on student, family and school practices relating to reading over summer, and having resources available to students and families.

Interventions can be designed which can improve school-related learning over summer for Māori and Pasifika students in decile 1 schools.

A successful intervention includes matching students to texts, specific teaching of strategies and metacognitive skills for summer reading, specific messages for parents, and following up.

The research

Background

The “summer learning effect” (SLE) has been observed and described in schools internationally (Borman, 2005). Typically, students from poorer communities and minority students make less growth than other students over summer, which contributes to a widening gap in achievement and an achievement barrier that gets larger over time (Alexander, Entwisle, & Olson, 2007). The summer learning effect has also been identified in New Zealand schools and their communities. Three studies in schools serving mainly Māori and Pasifika students in communities of low socioeconomic status confirmed the extent of the effect (McNaughton, Lai, & Hsiao, in press).

Explanations for the summer learning effect include a lack of access to texts at home (Allington et al., 2010), levels of engagement with texts, and rates of reading with family members (Kim, 2006). Considerable variation in the pattern of the summer learning effect in the schools serving Māori and Pasifika communities has been found among students within classrooms, between classrooms within schools, and between schools (Lai, McNaughton, Amituanai-Toloa, Turner, & Hsiao, 2009; Lai, McNaughton, Timperley, & Hsiao, 2009). For this reason, any attempt to overcome the summer learning effect should be contextualised, capitalising on practices already present.

Research questions

The research question was: What are the school and family practices that, singly and together, ameliorate the “summer learning effect”?

The first phase of the study aimed to describe, firstly, the extent and variability of the summer learning effect and, secondly, the associated school, family and student practices in decile 1 schools. Using those descriptions, the second phase of the study then aimed to design and test an intervention using features of school and family practices associated with little summer learning effect.

Phase 1

Methodology and analysis

Phase 1 was designed to create a profile of practices associated with the summer learning effect in decile 1 schools.

Seven schools in South Auckland participated in the research. The students at these schools were mostly from Māori and Pasifika families in communities of low socioeconomic status. An ongoing collaboration with these schools identified the summer learning effect as a major barrier to making higher achievement gains. We first examined STAR results, and found that while students made gains over 2009, there was a marked summer learning effect over the 2009–2010 summer break, with much lower scores at the start of 2010.

Using students’ results from STAR before and after the 2009–2010 summer break, we identified 8 high and 8 low “summer learning effect” classes of year 4 to 8 students. Within each class we selected one student with a high summer learning effect and one with a low summer learning effect.
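The selection step above can be illustrated with a minimal sketch. It assumes the summer learning effect is measured as the change in a student's score from before to after summer and that classes are ranked by their mean change; the report does not spell out the exact rule used, and the data, class labels and function names here are hypothetical.

```python
from statistics import mean

# Hypothetical records: (class_id, student_id, score_before_summer, score_after_summer).
# Scores stand in for STAR stanines; every value is invented for illustration.
records = [
    ("A", "a1", 5, 3), ("A", "a2", 4, 3),
    ("B", "b1", 4, 4), ("B", "b2", 5, 5),
    ("C", "c1", 6, 5), ("C", "c2", 3, 2),
    ("D", "d1", 4, 5), ("D", "d2", 5, 5),
]

def student_change(before, after):
    """Change over summer; negative values mean a drop (a summer learning effect)."""
    return after - before

def class_mean_changes(rows):
    """Mean change over summer for each class."""
    by_class = {}
    for class_id, _student, before, after in rows:
        by_class.setdefault(class_id, []).append(student_change(before, after))
    return {class_id: mean(changes) for class_id, changes in by_class.items()}

def split_high_low(class_changes, n):
    """Return the n classes with the largest drops and the n with the smallest."""
    ranked = sorted(class_changes, key=class_changes.get)  # largest drop first
    return ranked[:n], ranked[-n:]

def pick_contrast_students(rows, class_id):
    """Within one class, return (student with largest drop, student with smallest drop)."""
    in_class = sorted(
        (student_change(before, after), student)
        for cls, student, before, after in rows
        if cls == class_id
    )
    return in_class[0][1], in_class[-1][1]

changes = class_mean_changes(records)
high_sle, low_sle = split_high_low(changes, n=2)
```

With real data the same ranking would be run with `n=8` to mirror the 8 high and 8 low classes described above, followed by `pick_contrast_students` for each selected class.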

The teachers of these classes and the selected students and their parents were interviewed at the start of 2010 about what happened before and during summer. Teacher interviews asked about teaching practices possibly associated with the summer learning effect, including preparatory and follow-up activities with students and their families in four areas: child strategies, child metacognition, child engagement and parental guidance. Interviews with students and families mirrored these and asked about personal and family practices over summer and the resources available to them.

Teacher interviews were analysed to distinguish whether responses to interview questions varied on the basis of class summer learning effect—that is, whether there were practices that distinguished a teacher of a class with high summer learning effects from a teacher of a class with low summer learning effects. As there was one student with a high and one with a low summer learning effect within each high and low SLE class, students’ and their parents’ responses were analysed to examine the features associated with both students and classes with high and low summer learning effects.

Results

When teachers were asked what they did, their responses were similar, so it was difficult to distinguish classroom practices associated with the summer learning effect. However, teachers of classes with a low summer learning effect were more likely to encourage their students to use the library over the summer break, to provide guidance to individual students, and to help their students choose suitable books, while, surprisingly, teachers of classes with a high summer learning effect were more likely to provide specific books for parents.

Teacher responses were then categorised, according to how they framed their instruction, as either general practices, assumed to carry over to summer (e.g., “I always tell them to go to the library”), or specific, with a focus on the summer break. These practices were grouped across four dimensions: student reading strategies or reading competence, student metacognition or awareness, student engagement or actual practice, and information sharing with parents. Teachers in classes with a low summer learning effect more often reported preparing students specifically than generally across the four dimensions.

Student responses did not reveal many differences between high and low SLE students or classes. Reading variety reduced over summer for both students with a high and those with a low summer learning effect. However, all of the students from classes with a low summer learning effect reported that their teachers had given them ideas about reading over summer. Moreover, all of the students with a low summer learning effect, and all of the students from classes with a low summer learning effect, reported that someone at home helped them read. In contrast, the parents of high SLE students reported that access to texts influenced their child’s summer reading choices. Overall, responses indicated that low SLE students were supported to access and read engaging, high-interest texts.

Phase 2

Methodology and analysis

Phase 2 was designed to use the findings from Phase 1 to develop an intervention designed to overcome the summer learning effect. The focus of the intervention was on students in years 4 to 6 in 2010 (n = 648, in five of the schools). The intervention programme had three major components.

- Teacher preparation (including personalising access) before summer. In the last four weeks of the school year, the teacher focused on the “preparation, promotion and practice” of students for summer. The teachers designed a topic-based study within which access to texts based on personalised matching occurred. Preparing students involved administering a student survey of their reading interests in genre, topics and media.

- Parent guidance. The second component was for teachers to provide specific guidance to parents on how to support engagement over summer. The results of the student survey and the conferencing over the four-week unit were conveyed in key messages for parents on how parents could help, and why these key messages were important.

- Student review. The third component was a review session at the start of the new school year in February 2011. Students completed a review at the end of the summer to provide self-report measures of student and family reading practices over summer.

The intervention was planned and conducted collaboratively by teachers and the research team, and feedback was sought from teachers after the intervention concluded.

Analyses focused on a comparison between the previous summer (2009–2010) and the summer after the intervention (2010–2011) to see whether the intervention had reduced the summer learning effect. Further analyses examined the levels of implementation using a teacher focus survey, and the relationship between the summer learning effect and the student survey and student review.

Results

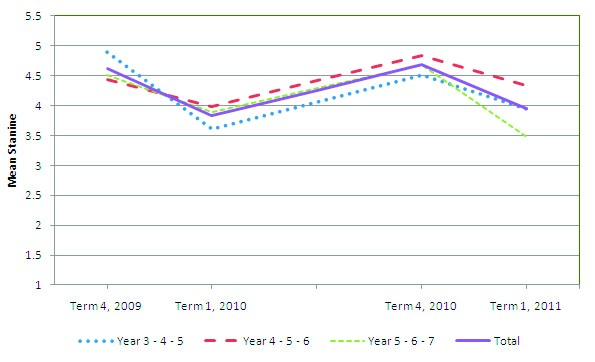

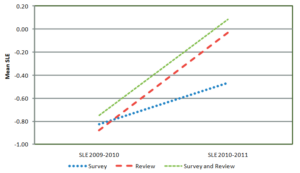

Overall, there was no statistically significant difference between the 2009–2010 and 2010–2011 summer learning effect, although there was some variation between cohorts (Figure 1).

Teacher focus surveys were examined. From the five schools which had classes with Year 4 to 6 students, a total of 29 teachers responded (out of a possible 46). The ratings in these, together with the low return rate, indicated that the intervention programme was not fully implemented. Feedback from schools in later meetings indicated that more time and preparation would have been helpful to allow them to include the programme more fully in their planning.

Figure 1. Mean stanines by cohort from 2009–2011.

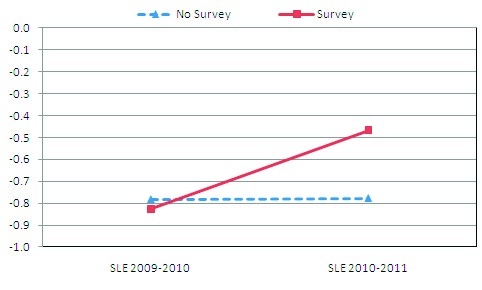

Approximately 15% of students (92 out of a possible 648 students, from three of the five schools) completed the student survey before summer, corroborating the low implementation rate. The survey was originally designed to help teachers plan the programme around students’ interests. While we found no particular relationship between individual responses in the survey and the summer learning effect, the comparison between students who completed the survey and those who did not was important. Students who did not complete the survey had a similar summer learning effect before and after the intervention, while students who did complete the survey had a large reduction in their summer learning effect (Figure 2). This suggests that there is something distinctive about the classes whose teachers asked their students to complete the survey. These classes and their teachers may have practices that relate to a reduced summer learning effect.

Figure 2. Mean summer learning effect in 2009–2010 and 2010–2011 for students who did and did not complete the survey.
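The comparison summarised in Figure 2 can be sketched as follows. This is only an illustration of the arithmetic, assuming each student's summer learning effect is the post-summer stanine minus the pre-summer stanine; the rows, values and function names are hypothetical and are not the study's data or analysis code.

```python
from statistics import mean

# Hypothetical rows: (completed_survey, change_2009_10, change_2010_11).
# Each change is the post-summer stanine minus the pre-summer stanine; values are invented.
students = [
    (True, -1.0, 0.0),
    (True, -2.0, 0.0),
    (False, -1.0, -1.0),
    (False, -1.0, -2.0),
    (False, -1.0, 0.0),
]

def mean_change_by_group(rows):
    """Mean summer change in each year, split by whether the survey was completed."""
    summary = {}
    for completed in (True, False):
        group = [row for row in rows if row[0] == completed]
        summary[completed] = {
            "2009-2010": mean(row[1] for row in group),
            "2010-2011": mean(row[2] for row in group),
        }
    return summary

def reduction(summary, completed):
    """How much smaller the summer drop was in the second year (positive = improvement)."""
    group = summary[completed]
    return group["2010-2011"] - group["2009-2010"]

summary = mean_change_by_group(students)
completer_gain = reduction(summary, True)       # drop shrank for survey completers
non_completer_gain = reduction(summary, False)  # no change for non-completers
```

In this invented data the completers' mean drop shrinks from 1.5 stanines to none while the non-completers' stays the same, which is the shape of the pattern described in the text, not its magnitude.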

Even more positive results were found for the student review after summer. Again, the percentage of students who completed the review indicated low implementation (23% or 148 students, from two schools). Students who completed the review had a large reduction in their summer learning effect, while those who did not had a slight increase in their summer learning effect. This lowering of the summer learning effect was also true for students who were in classes that completed the review, even if they themselves did not complete it (e.g., if they were absent that day).

This result was surprising considering that the review occurred after summer, so completing the review cannot have directly affected summer reading. This indicates that something about the students’ new classes had led to higher achievement even at such an early time in the year (within four weeks after starting school).

Figure 3. Mean summer learning effect in 2009–2010 and 2010–2011 for students who did and did not complete the review.
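The implementation rates quoted in this section can be checked with a short calculation. The counts come from the text; treating 648 as the denominator for the review rate is an assumption, since the text gives only the count and the percentage, and the `rate` helper is a hypothetical name.

```python
def rate(part, whole):
    """Percentage of `whole` represented by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)

# Counts as reported in the text.
teacher_return = rate(29, 46)   # teacher focus surveys returned
survey_rate = rate(92, 648)     # student surveys completed before summer
review_rate = rate(148, 648)    # student reviews completed after summer (denominator assumed)

print(teacher_return, survey_rate, review_rate)  # 63.0 14.2 22.8
```

The survey figure works out slightly below the rounded 15% given in the text, and the review figure is consistent with the reported 23%.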

The combined effects of the survey and review indicated that those who completed the review tended to have a greater reduction in the summer learning effect than those who completed the survey.

Figure 4. Mean summer learning effect in 2009–2010 and 2010–2011 for students who did and did not complete the review, survey and both.

Major implications

IMPLICATION 1: Find out what children like to read and engage them in reading motivating texts

The findings suggest that students’ motivation for reading over summer was primarily enjoyment. Moreover, support for students’ reading for enjoyment was apparent in low SLE teachers’ responses, where practices offered support for developing children’s interests and strategies for choosing texts. The intervention results also showed the positive effects of the student review. Combined, the evidence suggests that there are sound reasons for believing that supporting children’s reading interests should have positive effects on students’ reading, and there is also empirical evidence that finding out about these interests helps reduce the summer learning effect.

IMPLICATION 2: Mentor students to develop those aspects of their literacy which are to do with engagement, their development of “taste” and informational interests. Teach them to access these texts and to monitor their enjoyment.

Our results show that engagement in reading over summer relies on students selecting and enjoying texts as part of leisure activities. Thus, whereas metacognitive prompts and goal setting based on schooling success may achieve higher outcomes within a year, teaching that is specific to reading over summer may need to develop metacognitive prompts that monitor enjoyment. This may mean developing students’ strategies to enhance their own enjoyment of texts; for example, awareness of what types of texts they like, where to get similar genre texts, and identifying what they like and do not like in a text. Students also need to develop skills for selecting appropriate texts, both in terms of finding these texts (for example, at the library or online) and in terms of making choices that will engage them.

IMPLICATION 3: Give specific messages to parents about how to support children’s engagement with text.

Guidance and support from schools seem to be a powerful ingredient, both at the level of the child and at the level of the family. Support for students seems most powerful when specific to summer. Support for parents may also benefit from specificity. In our study, parents of students with a low summer learning effect were able to describe specific practices that they use to support their children. Coupled with a focus on engagement, this suggests that general messages to “carry on” with reading over summer are less effective than specific messages that focus on how to support children’s interests and enjoyment of reading. Encourage parents to discuss with their child what they are reading, and to focus on interest and enjoyment over summer rather than success at school. Give specific advice on how to support students’ access to motivating and interesting texts.

IMPLICATION 4: Find out about students’ summer reading at the beginning of the year.

While rates of implementation of the intervention were low, those who did complete the intervention (as measured by completion of the student survey and/or review) had a large reduction in the summer learning effect, to the extent that, on average, the summer learning effect disappeared entirely. Moreover, the reduction in the summer learning effect for the students completing the review, which was originally intended only as a check on the effect of the intervention, was almost twice that of students completing the survey before summer. This is an unexpected and somewhat curious finding. One possible explanation is that the review of summer reading allowed teachers to get to know their learners, specifically their reading interests and out-of-school reading practices. Alternatively, it may be that the review of summer reading increased the teachers’ and the students’ focus on reading at the beginning of the school year. Both of these are important ingredients of getting learners off to a fast start.

References

Alexander, K. L., Entwisle, D. R., & Olson, L. S. (2007). Lasting consequences of the summer learning gap. American Sociological Review, 72, 167–180.

Allington, R. L., McGill-Franzen, A. M., Camilli, G., Williams, L., Graff, J., Zeig, J., et al. (2007, April). Ameliorating summer reading setback among economically disadvantaged elementary students. Paper presented at the American Educational Research Association Annual Meeting, Chicago, IL.

Borman, G. D. (2005). National efforts to bring reform to scale in high-poverty schools: Outcomes and implications. In L. Parker (Ed.), Review of Research in Education, 29 (pp. 1–28). Washington, DC: American Educational Research Association.

Kim, J. S. (2006). Effects of a voluntary summer reading intervention on reading achievement: Results from a randomized field trial. Educational Evaluation and Policy Analysis, 28(4), 335–355.

Lai, M. K., McNaughton, S., Amituanai-Toloa, M., Turner, R., & Hsiao, S. (2009). Sustained acceleration of achievement in reading comprehension: The New Zealand experience. Reading Research Quarterly, 44(1), 30–56.

Lai, M. K., McNaughton, S., Timperley, H., & Hsiao, S. (2009). Sustaining continued acceleration in reading comprehension achievement following an intervention. Educational Assessment, Evaluation and Accountability, 21(1), 81–100.