Executive Summary

The schools of South Auckland that have high proportions of Māori and Pasifika students have long been described by researchers as sites of low achievement, particularly in literacy (e.g., Ramsay, Sneddon, Grenfell & Ford, 1981). However, recent evidence suggests that the disparities in reading accuracy between Māori and Pasifika students and other students have been reduced, and that the proportions of students in the lowest bands of achievement have fallen substantially. Despite this, the evidence also suggests that at Year 4 and Year 8, the disparities in reading comprehension have continued, if not increased (Crooks & Flockton, 2005).

A research and development programme, conducted as a collaborative partnership between researchers, schools and the Ministry of Education, was designed to test several questions about achievement in seven decile 1 schools in South Auckland. These questions were:

- Can a research-practice collaboration develop cluster-wide and school-based professional learning communities that are able to critically analyse and problem solve issues of instructional effectiveness, thereby developing more effective instruction that has a powerful, educationally significant impact on Māori and Pasifika children’s comprehension at Years 4–9 in decile 1 schools?

- Can a set of effective instructional activities be identified that teachers can use to enhance the teaching of comprehension for Māori and Pasifika children in Years 4–9 in decile 1 schools?

In addition, there was a specific question about Samoan students and achievement in Samoan bilingual classrooms:

- Can the research and development programme contribute to more effective instruction for Samoan students in Samoan bilingual classes?

These questions were based on a set of hypotheses about the nature of effective instruction for reading comprehension, and the nature of effective school-based interventions. There were two main hypotheses: first, that more effective teaching could be developed through a professional learning community that has a continuing process of critical discussion and problem solving, based on evidence (Robinson & Lai, 2006); and secondly, that effective instruction would include a range of attributes, such as explicit teaching of strategies, and deliberate teaching of vocabulary (Pressley, 2002), but that these would need to be contextualized to the specific needs created by past histories of schooling and contemporary profiles.

The research and development programme was conducted over three years with up to 70 teachers and, in different years, between 1200 and 1900 students, over 90 percent of whom were Pasifika or Māori students. In the six Samoan bilingual classes from two schools, there were between 140 and 169 students across different years. A quasi-experimental design was employed to examine relationships between the programme and the outcomes over three years. The robustness of the design was enhanced by features such as a comparison with an untreated cluster of similar schools, and checks on subject attrition. Repeated measures of student achievement at the beginning and the end of each year, and a final measure at the beginning of the fourth year, form the basis of the design which, among other things, examines rates of gain against predicted patterns of growth generated from a baseline.
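The summary does not state exactly how the baseline projections were computed. One simple reading, assumed here purely for illustration, is that the baseline profile at each year level stands in for where a cohort would have been without the programme: a cohort that started at Year 4 and was retested two years later is compared with the baseline students who were already at Year 6. The Python sketch below implements that assumed comparison; the function name `gain_over_projection` and its inputs are hypothetical and are not taken from the study.

```python
from statistics import mean


def gain_over_projection(baseline_by_year, start_year, years_elapsed, observed):
    """Observed cohort mean stanine minus a baseline projection (illustrative only).

    baseline_by_year: hypothetical mapping of year level -> list of baseline stanines.
    Assumption: without the programme, a cohort starting at `start_year` would,
    after `years_elapsed` years, score like the baseline students who were
    already at year level `start_year + years_elapsed`.
    """
    target_year = start_year + years_elapsed
    if target_year not in baseline_by_year:
        raise KeyError(f"no baseline scores for Year {target_year}")
    projected = mean(baseline_by_year[target_year])  # expected mean without intervention
    return mean(observed) - projected  # positive values indicate gains above projection


# Hypothetical example data, not study results.
baseline = {4: [3, 3, 3], 5: [3, 3, 4], 6: [3, 4]}
print(gain_over_projection(baseline, start_year=4, years_elapsed=2, observed=[4, 5]))
```

With baseline stanines of 3 and 4 at Year 6 (projected mean 3.5) and an observed cohort scoring 4 and 5 (mean 4.5), the function returns 1.0, that is, one stanine above the projection.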

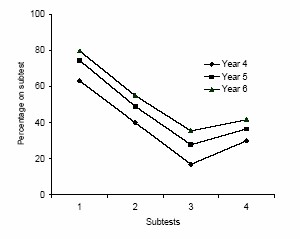

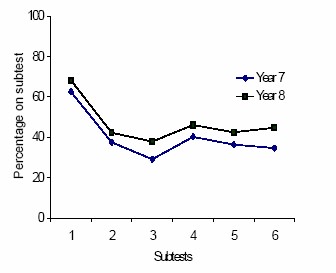

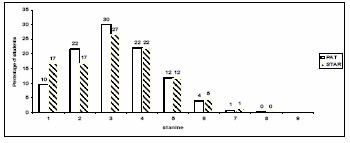

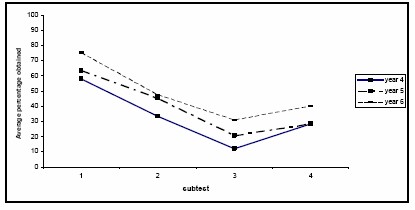

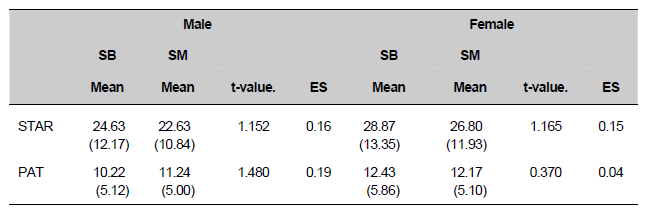

An initial step involved collecting baseline ‘profiles’ of achievement, using the standardised assessments of reading comprehension from PAT (Reid & Elley, 1991) and of a range of aspects of reading comprehension, including decoding, from STAR (Elley, 2001). It also involved collecting baseline profiles of classroom instruction through systematic classroom observations. Together these baselines provided detailed evidence about strengths and weaknesses in the students’ reading comprehension, which could be mapped onto patterns of instruction in the classroom. For example, they showed that low decoding levels were generally not a problem; rather, the difficulties lay in patterns of checking and detecting threats to meaning in paragraph comprehension, and in the size of students’ vocabularies and their knowledge of word meanings. An unpredicted finding was that although explicit strategy instruction occurred at high rates, students focused on the strategies as ends in themselves, and often resorted to guessing. Classroom observations showed a low incidence of teachers or students monitoring and checking strategies, and low rates of identifying and elaborating the meanings of low-frequency words, unusual uses of common words, and idiomatic expressions.

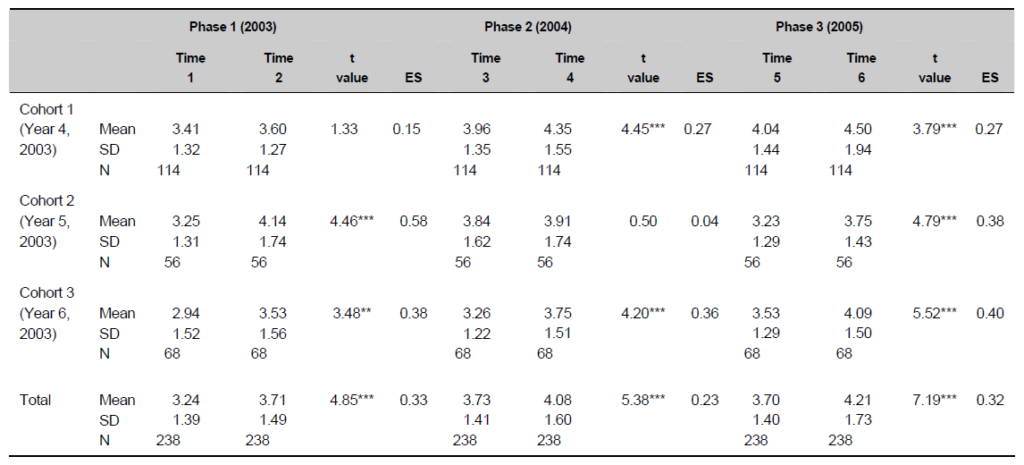

The first phase included systematic feedback, analysis and problem solving at cluster, school and classroom levels, using the profiles as evidence. This process was repeated each year thereafter. A second phase added targeted professional development for all the Year 4–9 teachers, based on the evidence from the first phase. The third phase involved planned sustainability of the professional learning communities, with teacher-designed projects and a cluster-led conference.

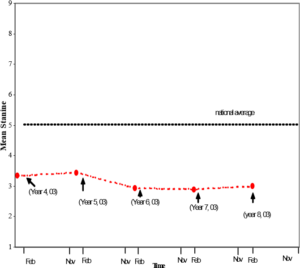

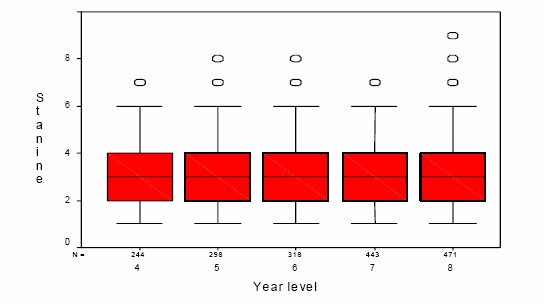

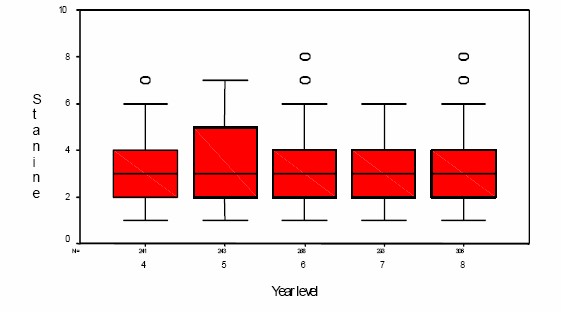

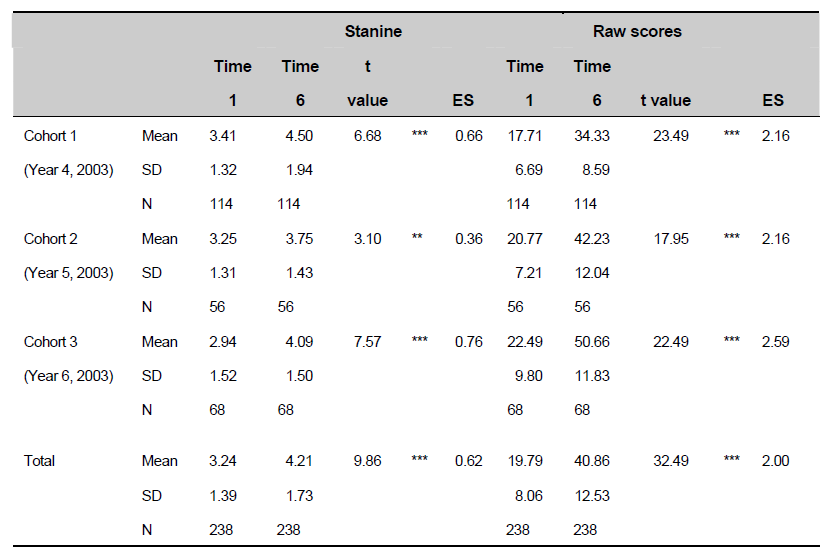

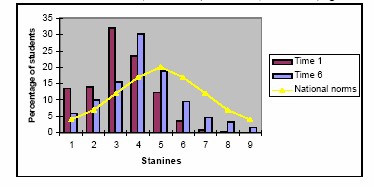

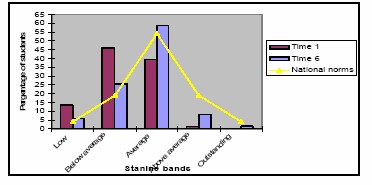

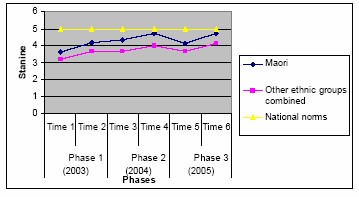

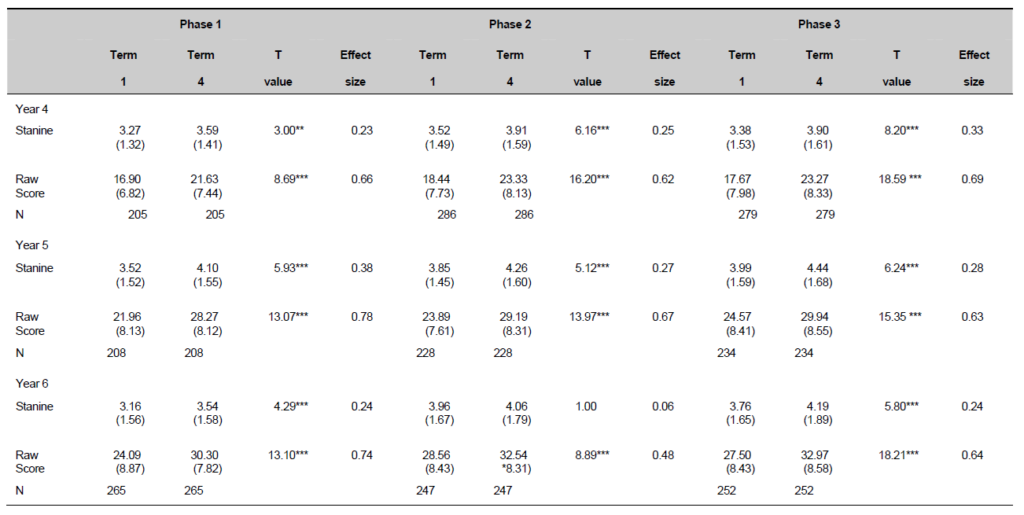

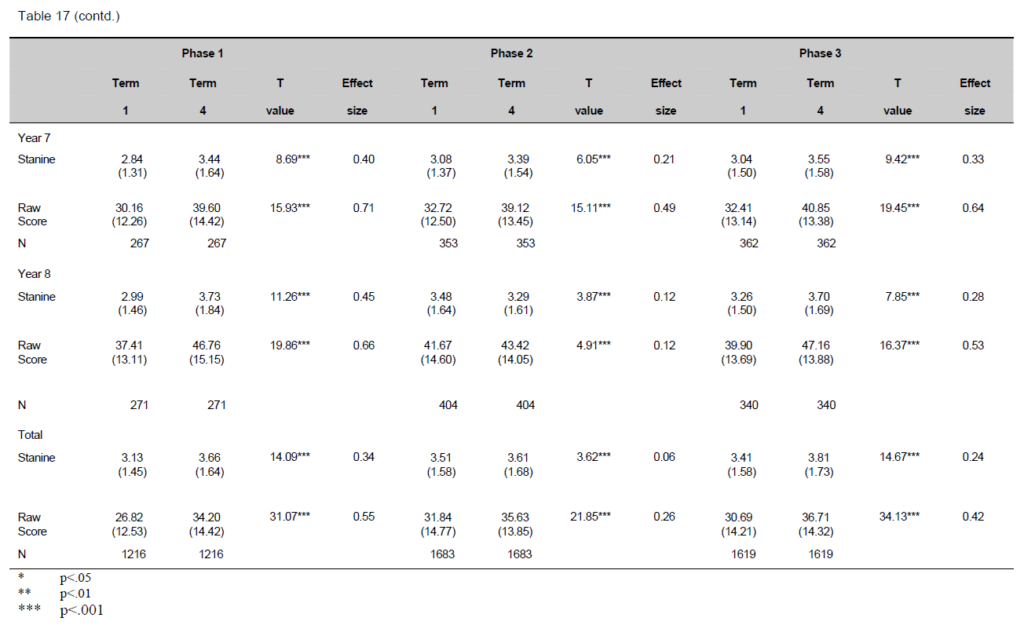

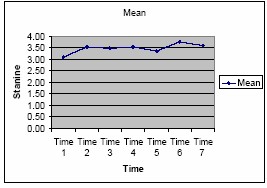

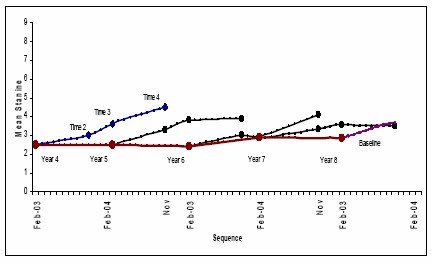

At baseline, students were on average at stanine 3.1, approximately two years below expected levels; this pattern held generally, with some variation across year levels and across schools. To test the impact of the programme, several analyses were carried out using longitudinal cohorts, comparisons with baseline projections, and changes in total school populations.

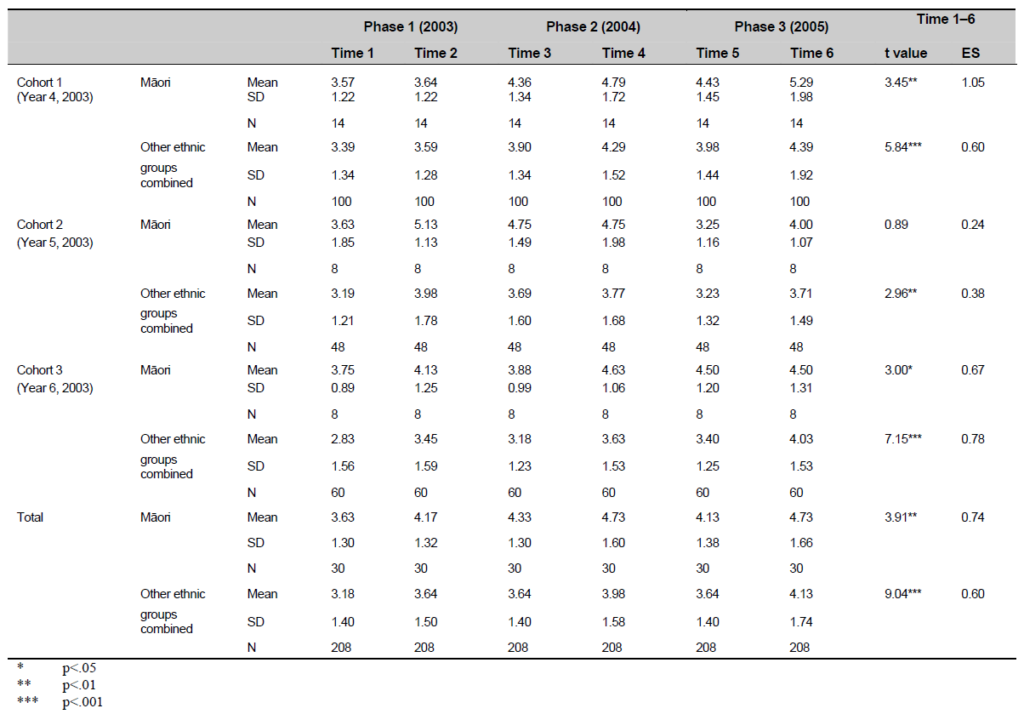

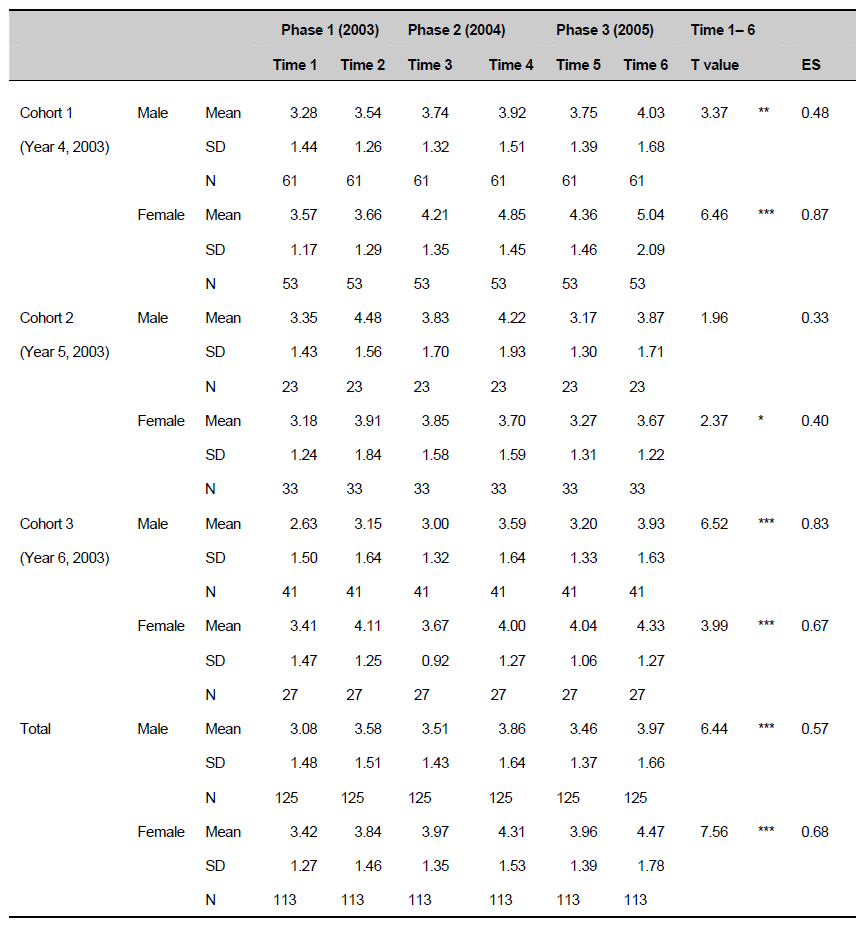

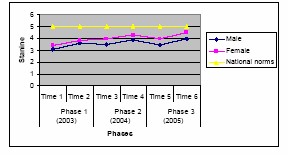

Analysis of achievement for longitudinal cohorts showed that by the end of the project, the average student scored in the average band of achievement (stanine 4.21). The overall effect size for gains in stanines was 0.62. Māori students’ achievement accelerated at rates similar to those of the other ethnic groups participating in the project, so that by the end of the project, the average Māori student scored within the average band (mean = 4.73), with one cohort of Māori students (Year 4) scoring above the national expected average at stanine 5.29. Male and female students made similar progress over the three years of the intervention, but female students, on average, started with higher levels of achievement than male students. On average, students in each school made accelerated gains in achievement from the beginning to the end of the project.

Analyses based on the quasi-experimental design showed that after two years and after three years, students had statistically significantly higher achievement than baseline comparison groups (effect sizes ranged from 0.31 to 0.59), and were achieving at statistically significantly higher levels than a comparison cluster of schools (effect sizes ranged from 0.33 to 0.61).
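The summary reports effect sizes but not the formula behind them. A common choice for comparing two groups is Cohen's d, the difference in group means divided by a pooled standard deviation; whether the study used exactly this formula (rather than, for example, Hedges' g or a baseline standard deviation) is an assumption here. A minimal Python sketch, with a hypothetical function name:

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, comparison):
    """Standardised mean difference using a pooled sample SD (assumed formula)."""
    n1, n2 = len(treatment), len(comparison)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    s1, s2 = stdev(treatment), stdev(comparison)  # sample standard deviations
    # Pool the two variances, weighting each by its degrees of freedom.
    pooled_sd = sqrt(((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(comparison)) / pooled_sd


# Hypothetical stanine scores, not study data.
print(cohens_d([5, 6, 7], [3, 4, 5]))
```

Here `cohens_d([5, 6, 7], [3, 4, 5])` returns 2.0: the means differ by 2 stanines and both groups have a standard deviation of 1. Effect sizes of the size reported above (roughly 0.3 to 0.6) correspond to mean differences of about a third to three-fifths of a pooled standard deviation under this formula.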

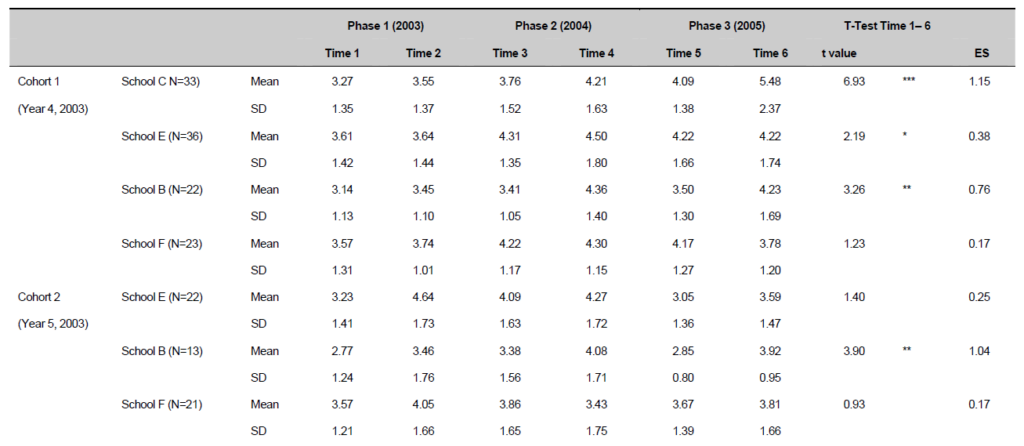

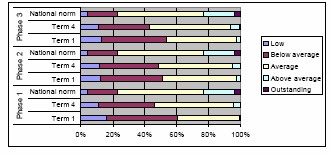

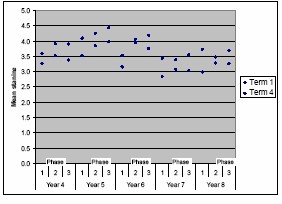

When total school populations were analysed (including new students entering and students leaving), a picture similar to that of the previous analyses emerged. The overall level of achievement showed a variable but increasing trend over time, so that by the end of the intervention, the average stanine for 1700 students at seven schools was 3.61. Gains varied between schools, and within schools across the three phases. Several factors were suggested as contributing to these differences, including the degree of participation by schools and teachers, and aspects of curriculum planning.

Observations of classroom instruction were carried out systematically in both the first and the second years. Significant changes in the types of teacher and student exchanges relating to the focus of the intervention were linked to the pattern of gains in the component tests over two years. Further case studies of teachers showed that a high-gain teacher more often directed students’ awareness to the requirements of activities, clarified her high expectations, pushed her students with complex tasks, introduced more complex and less familiar language including idiomatic uses, created a classroom community that enjoyed the use and study of oral and written language, exposed students regularly to rich and varied texts, incorporated students’ cultural and linguistic resources, and clarified areas of confusion.

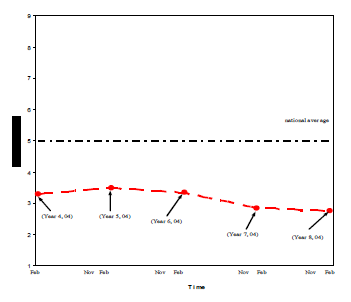

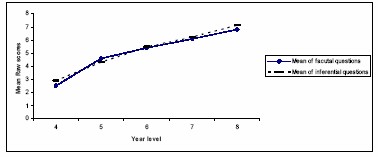

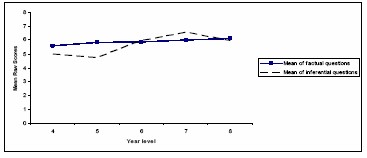

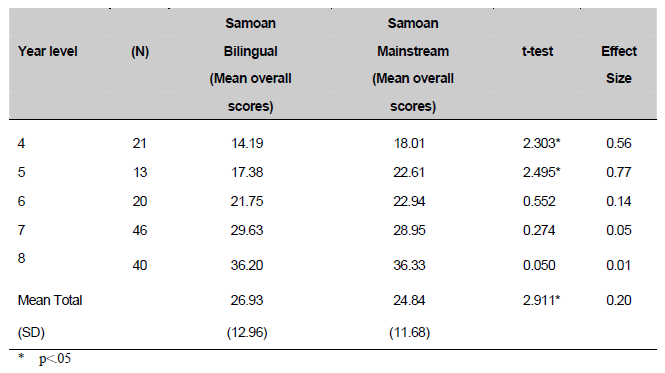

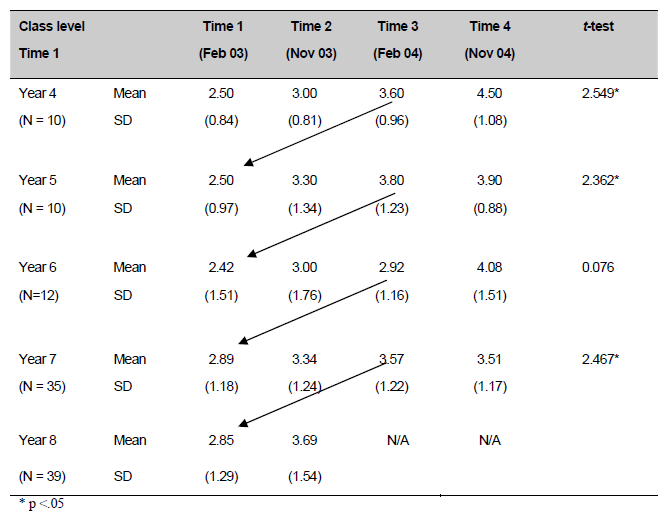

The analyses of students in Samoan bilingual classrooms showed that the programme was effective in those classes too. Gains by students in the bilingual classrooms were at least as high as the gains by Samoan students in mainstream classrooms, and at three of the year levels they were noticeably higher. Students in bilingual classrooms had significantly lower English reading achievement in Year 4 and Year 5, but from Year 6 onwards their achievement levels in English were similar to those of Samoan students in mainstream classrooms. Overall, cohorts made a gain of 1.13 stanines in two years; for four cohorts, this was a higher rate of gain than that of Samoan students in mainstream classes. Gains in these classrooms could also be linked with the degree of participation by schools and teachers.

We concluded that it is possible to develop more effective teaching that impacts directly on the reading comprehension achievement of Year 4–9 children. Overall, the gains amounted to about one year’s progress over three years, in addition to nationally expected progress. When these gains are considered in terms of the history of schooling in South Auckland, their educational significance, and the international literature on schooling improvement, they are seen to be substantial. Even when results for all the students present from the beginning to the end are considered, including those who subsequently left and those who subsequently entered the school, either from earlier levels or as new students from other schools, the levels of achievement at the schools have increased considerably. Given the quasi-experimental design with its additional strengths, these gains can be attributed with some confidence to the effects of the three-phase model adopted by the research and development programme.

The analyses suggest that thinking about and critically discussing the evidence at a classroom, school and cluster level led to a significant part of the overall gains in achievement and that the professional learning communities had the capacity to use the evidence to make changes to existing practices. This is likely to be dependent on external support, in the form of collaborative research-practice-policy partnerships (e.g., Robinson & Lai, 2006). We need to consider how to foster such partnerships, in terms of both the kinds of partnerships being developed, and the infrastructure to support the development and sustainability of such partnerships.

The analyses of instruction show that specific aspects of instruction changed, including the focus on checking and detecting threats to gaining meaning in texts and boosting vocabulary acquisition, consistent with the focus of the programme and consistent with the gains that were made. But they indicated the need for caution in making assumptions about instructional and learning needs from the existing literature alone. They also indicated that effective instruction needs to be designed to fit the context-specific needs created by past histories of schooling and contemporary profiles. Interestingly, gains on the decoding test also increased to about the same degree as gains in other areas, despite not being a direct target of the intervention.

The educational intervention also had an impact on Samoan students’ achievement in bilingual classrooms, demonstrating that Samoan students in bilingual classes can develop literacy in English to levels similar to those of other Samoan students who are not in bilingual classes. The evidence also shows that English comprehension in these classes reaches mainstream levels by around Year 6, but that this rate of change may also be modifiable. It is important to see these results in a wider developmental and educational context, involving bilingual and biliteracy development in these classes.

1. Introduction

Yesterday was too late?

In 1981, Peter Ramsay (Ramsay, Sneddon, Grenfell & Ford, 1981) and his colleagues at the University of Waikato completed a study of the schools in South Auckland. The title of their report was Tomorrow may be too late. They argued that there was an impending crisis created by “educational disadvantage suffered by most school-aged students in Mangere and Otara” who were “achieving well below their chronological age” (p. 41). They concluded with “a plea for urgency as the needs of the children of Mangere and Otara are very pressing. Tomorrow may be too late!” (p. v).

The gap in achievement between Māori and non-Māori children in mainstream schools is not a recent phenomenon. Earlier reports, such as the Currie (1967) and Hunn (1960) reports on education in the 1950s, had identified this difference as important and as urgently in need of a solution (see also Openshaw, Lee & Lee, 1993). The long-standing nature of the “problem” for Māori students is important to note, because some commentaries suggest that it is relatively recent and can be linked to changes in methods of teaching reading and writing that began in the 1960s (Awatere Huata, 2002; Nicholson, 2000).

Yet the historical picture is not entirely bleak. There is evidence that in the colonial period, there were times when Māori children outperformed other children in some schools. Some evidence for this can be found in the Survey of Native Schools for 1930 (Education Gazette, 1 December 1930, Department of Education, 1930; see also McNaughton, 2000).

The sense of crisis that Ramsay expressed for the sake of children, communities and families is also present in reports from other countries (Snow, Burns & Griffin, 1998). The need is identified for communities that have less economic and political power than mainstream communities, and whose children are considered to be “minorities”. But there has been little evidence that the crisis can be solved in schools. In the United States, Borman (2005) shows that national reforms to boost the achievement of children in low-performing schools serving the poorest communities have produced small gains in the short term (effect sizes of less than 0.20), but that after seven years, in those few schools that sustain reforms over a long period, the effects increase (to estimated effect sizes of around 0.50). Across the country as a whole, some achievement gains have occurred, but they have typically been small and have needed to accumulate over long periods of time.

At a more specific level, some studies from the United States have shown that clusters of schools serving ‘minority’ children have been able to make a substantial difference to children’s achievement. In one set of studies (Taylor, Pearson, Peterson & Rodriguez, 2005), researchers intervened in high-poverty schools with carefully designed professional development and research. They too found small cumulative gains across two years. This study and others pointed to important school-level factors that must be in place for all children to achieve at high levels in reading. Summarising these, Taylor et al. (2005) noted six key elements: improved student learning; strong building leadership; strong staff collaboration; ongoing professional development; sharing student assessment data; and reaching out to parents. In these studies, there is evidence that achievement can be affected and, in the case of studies such as Taylor et al., that small gains over two years could be attributed to these characteristics.

The days after Ramsay’s tomorrow

Where does such offshore evidence leave the schools of South Auckland, which, according to Ramsay, had already received substantial additional resources by the early 1980s? There is little evidence that Ramsay’s concern led to immediate changes. The evidence from both national and international comparisons suggests that by the beginning of the 1990s, the children in decile 1 schools, and more generally children who were Māori and Pasifika, were still not achieving as well as non-Māori and non-Pasifika children in reading comprehension. The reading comprehension comparisons across 32 countries in the International Association for the Evaluation of Educational Achievement study (see http://www.iea.nl/reading_literacy.html) provided stark evidence of what came to be called a “long tail” in the distribution of achievement. The problem was that while New Zealand in general continued to have high average achievement, and the best students in New Zealand were superior to other students in the world, Māori and Pasifika children were over-represented in the “long tail” (Elley, 1992; Wagemaker, 1992).

In New Zealand, the recognition of the distribution problem, as well as other research developments, has had an effect. Reports by a Literacy Task Force (1999) and a Literacy Experts Group (1999) contributed to a national policy shift, which was implemented in the National Literacy and Numeracy Strategy. The policy shift promoted concerted professional development and research-practice development focused on Years 1–4 and on Māori and Pasifika children, especially those in decile 1 schools.

Associated with this policy and practice shift, there is now evidence from the National Education Monitoring Project (NEMP) and renorming exercises that changes in the targeted areas have occurred (Elley, 2005). The news is positive for the early stages of literacy instruction. In the NEMP data, the one area of literacy achievement showing clear changes is reading decoding, both accuracy and fluency (Flockton & Crooks, 2001). The second cycle of NEMP reading assessments showed that the percentage of children reading below age level in reading accuracy at Year 4 had fallen markedly from 1996 to 2000, from around 20 percent to around 13 percent.

Little improvement occurred for Year 8 children in oral reading (Flockton & Crooks, 2001). A recent renorming of standardised assessments at Year 1 (6 years) conducted in 2000 also suggests that knowledge of letters and sounds has improved (Clay, 2002).

These increases in oral reading accuracy were maintained in the third (2004) cycle of assessments at Year 4. Further notable increases in accuracy were found for the Year 8 children, with only around 11 percent at both year levels now reading below age level (Crooks & Flockton, 2005). The breakdown of gains in 2000 and 2004 suggests that reading accuracy had improved at similar rates at Year 4 for both Māori and Pakeha children (Flockton, 2003). But by 2004, the analyses showed a substantial reduction at Year 4 in the gap between Pakeha and Māori students (see further comment at nemp.otago.ac.nz/forum_comment/2004).

Research-based interventions using experimental designs have shown that the gaps at this early stage can be reduced considerably. We also know many of the characteristics of effective teaching at that early stage. For example, in the “Picking up the Pace” research with Māori and Pasifika children in decile 1 schools in Mangere and Otara, the children’s typical achievement in areas of decoding after a year at school was two stanines[1] below average levels (Phillips, McNaughton, & MacDonald, 2004). A research-based intervention used professional development with teachers and teacher leaders to increase effectiveness in areas of reading and writing, including specific phonics instruction. Where teaching approaches were fine-tuned to solve children’s confusions and to make the purpose of classroom activities more obvious, and where higher expectations about achievement were developed through evidence-based analyses of progress, the children’s achievement was raised to close to the national distribution (see Phillips, McNaughton, & MacDonald, 2004). In some areas, such as alphabet knowledge, their progress was as good as or better than typical progress; in others, such as progress through text levels, they closely approximated typical progress; but in one area, generalised word recognition, they remained noticeably below average levels.

“Tomorrow” is still the same for reading comprehension

These indicators of progress are cause for some celebration, given the urgency signalled in Ramsay’s report and the seemingly intractable nature of the teaching difficulty over decades. But the news has not all been good. For reading comprehension, little appeared to have changed for Māori and Pasifika children in low decile schools over the period in which the decoding changes occurred, as we will show below. The NEMP data indicate increases in levels of comprehension in Year 4 from 1996 to 2000, but the breakdown of the achievement patterns suggests a substantially wider disparity between Māori and non-Māori in comprehension at both Year 4 and Year 8. Furthermore, for children in low decile schools, gaps in comprehension increased at both Year 4 and Year 8 (Flockton, 2003).

In the third cycle of assessments in 2004, the gains in oral reading accuracy were not matched by similar gains in reading comprehension for the total group of students at either Year 4 or Year 8. The detailed comparisons suggest that the gaps in oral reading accuracy between Māori and Pasifika students and Pakeha students, which had closed between 1996 and 2000, reduced further in 2004. But this was not matched in comprehension (Crooks & Flockton, 2005). Commentaries on the 2004 report note that Māori children performed well in decoding, but that there were large differences in favour of Pakeha in aspects of comprehension (nemp.otago.ac.nz/forum_comment/2004). These differences were apparent for Pasifika children too, and for decile 1-3 schools when compared with other decile groups (Crooks & Flockton, 2005).

This is true also of at least some of the schools in South Auckland. When we completed what we describe further in this report as a baseline profile of a cluster of schools in Mangere, we found that across schools and year levels, achievement in reading comprehension was relatively flat at around stanine 3, and something like two years below what would be expected as average progress nationally (Lai, McNaughton, MacDonald, & Farry, 2004). We have since repeated this finding with a cluster of Otara schools. They too were, on average, around stanine 3 across year levels and across schools (Lai, McNaughton, MacDonald, Amituanai-Toloa & Farry, 2006).

What we now know is that even a dramatic change in the teaching of early reading does not necessarily mean that the gap reduces further up the system. Experimental demonstrations specifically targeting the teaching of phonics also tend to show very limited transfer to comprehension (Paris, 2005). Recent national data from the asTTle project across multiple dimensions of reading comprehension confirm the NEMP picture of large differences between Māori and Pasifika children and other children, differences that are stable across decile levels, despite significant trends of higher achievement from lower to higher decile schools (Hattie, 2002).

These comparisons need to be treated with an important qualification. The broad description of these disparities can mask important aspects of the literacy development and achievement of children in so-called “minority” groups. The conventional indicators of school literacy represent some of what children learn about literacy. But children who are in communities with low employment, low incomes, and minority cultural and language status have engaged in a range of literacy and language activities, some of which might be quite different from those of mainstream children. Their knowledge, therefore, may not be well represented in tests of conventional literacy practices, especially at the beginning of schooling (McNaughton, 1999; Snow, Burns, & Griffin, 1998) and as they move into the middle school levels.

Here, it is important to note that there is an urgent challenge of strategic importance to all of New Zealand. Students need greater ranges and levels of knowledge and skills for post-secondary study and for employment. Education is increasingly important to the success of both individuals and nations (Darling-Hammond & Bransford, 2005). Over-representation of particular groups in low achievement bands is not acceptable at individual, community or national levels, no matter what the proportion of the population. It is a pressing matter of cultural, political, constitutional (Treaty of Waitangi), ethical, economic and educational significance that we develop more effective forms of instruction for these students. It is worth noting that by 2021, Māori children will comprise 28 percent and Pasifika children 11 percent of all under-15-year-olds in New Zealand (Statistics New Zealand, 2002). In Mangere and Otara schools, children from these communities already make up over 90 percent of many school rolls.

There is an additional dimension to that challenge. Many of the children in the South Auckland schools have a language other than English as their home language. Yet language development for these children is not well understood. In the context of bilingual instruction, for example, and the relationships between development in two languages and two systems of literacy, little is known about biliteracy development and relationships with literacy and literacy instruction (Sweet & Snow, 2003).

The research we report here has two foci. There is a main study of seven schools, their teachers and their students. Within this, there is a second study specifically of teachers and students in Samoan bilingual classrooms. This is the first time, to our knowledge, that there has been a specific research based intervention with Samoan students in bilingual programmes which looks at their achievement.

Twenty-five years after the Ramsay report, we can report in this study important gains in reading comprehension in Year 4–9 students in decile 1 schools in Mangere. This report describes the science of these changes and documents the research and development programme that has taken place. However, the science is closely bound up with a policy context of associated changes in practices. It is likely that without the policy context, the science involved in developing more effective instruction would have achieved less. The results reported here need to be considered with this policy context in mind (Annan & Robinson, 2005).

In addition, the research is located in a particular historical context of school-based interventions. One such intervention is the landmark study “Picking up the Pace” (Phillips, McNaughton & MacDonald, 2001). As noted above, this focused on instruction in the first year, and set out to examine the separate and combined effects on children’s achievement of providing co-ordinated professional development to teachers in early childhood settings and to teachers of children in their first year of schooling. Since the success of that project, which was completed in 2001, further professional development for Year 1 teachers has occurred, based on the practices identified in the research, and in some schools the programme has been extended through to Year 3.

That study and its further development were part of a much broader initiative, “Strengthening Education in Mangere and Otara” (SEMO), which aimed to raise the achievement levels of children in these two areas. SEMO’s general aim was to strengthen schools in the area and to enhance children’s learning opportunities, particularly in literacy, by enhancing the work of early childhood and primary teachers who were providing literacy programmes. SEMO was succeeded by a further policy and practice development in Mangere and Otara, “Analysis and Use of Student Achievement Data” (AUSAD). This project is located within that government-funded school improvement initiative. The goal of AUSAD is to offer high-quality learning environments to raise achievement. This is done by using student achievement information to inquire into the nature of the under-achievement, to test competing explanations of its cause, and to monitor the impact of teachers’ decisions about how to intervene. In short, the focus is on developing the inquiry skills of teachers to improve school practices and student learning outcomes. The initiative comprises a number of interventions focusing on improving literacy and numeracy achievement (e.g., the “Third Chance” programme aimed at improving literacy in Years 1–3).

Reading comprehension

Recent commentaries identify a major theoretical challenge facing literacy instruction. Now that some of the pressing issues in beginning reading instruction (but by no means all) have been resolved, the challenge concerns the teaching of reading comprehension. Higher levels of reading comprehension and related areas of critical thinking are central to the purposes of contemporary schooling, and are part of the education priorities and key competencies that have been set for New Zealand education (Ministry of Education, 2005). But there is a critical need for research into instruction that enhances comprehension, and into interventions that enable schools to teach comprehension effectively. The most recent reviews of relationships between research and practice note that overall evidence of teacher effectiveness is limited, and that research has not impacted greatly on effective comprehension instruction (see Block & Pressley, 2002). Similarly, the RAND reading study group, which was set up in 1999 by the US Department of Education’s Office of Educational Research and Improvement to identify the most pressing needs for research in teaching reading, has concluded:

We have made enormous progress over the last 25 years in understanding how to teach aspects of reading. We know about the role of phonological awareness in cracking the alphabetic code, the value of explicit instruction in sound–letter relationships, and the importance of reading practice in producing fluency.… The fruits of that progress will be lost unless we also attend to issues of comprehension. Comprehension is, after all, the point of reading. (Sweet & Snow, 2003, p. xii)

The challenges to teaching effectively have been identified (Pressley, 2002; Sweet & Snow, 2003). One is the need to build on the gains made in research about instructional practices for beginning literacy. A second is to do with knowledge transfer, a failure to turn all that we know about comprehension and comprehension instruction into generally more effective teaching.

These needs are particularly significant for schools serving culturally and linguistically diverse populations in low income areas (Garcia, 2003).

As noted above, on average, students in the middle years of school in New Zealand have high levels of reading comprehension, judged by international comparisons; however, there are large disparities within the distribution of achievement. These are between children from both Māori and Pasifika communities in urban schools with the lowest employment and income levels, and other children (Alton-Lee, 2004). These findings highlight the need for instructional approaches that enable teachers to develop, use and sustain effective teaching of reading comprehension with culturally and linguistically diverse students. For Pressley (2002), this challenge represents an application problem. We know a lot about what students need to be able to do, which includes such things as regulating strategy use, and we know a lot about specific instructional effects, such as the need for explicit strategy instruction. What he claims we have failed to do is translate that knowledge into widespread usage with known effects. While Sweet and Snow echo this claim in their RAND summary of reading comprehension instruction, they also argue that there is yet more to be known about specific teaching and learning relationships, especially in the context of diverse readers, diverse text types and diverse instructional contexts (Sweet & Snow, 2003).

Generally, there is considerable consensus around what students need to learn, and what effective teaching looks like. In order to comprehend written text, a reader needs to be able to decode accurately and fluently, and to have a wide and appropriate vocabulary, as well as appropriate and expanding topic and world knowledge, active comprehension strategies, and active monitoring and fix-up strategies (Block & Pressley, 2002; Pressley, 2002). So it follows that children who are making relatively low progress may have difficulties in one or more of these areas. The consensus around teaching effectively identifies attributes of both content (curriculum) and process (Taylor et al., 2005). For the middle grades, these include instructional processes in which goals are made clear, and which involve both coaching and inquiry styles that engage students in higher-level thinking skills. Effective instruction also provides direct and explicit instruction in skills and strategies for comprehension. Effective teaching actively engages students in a great deal of actual reading and writing, and instructs in ways that enable expertise to generalise and through which students come to self-regulate independently.

Researchers have also identified the teacher’s role in building students’ sense of self-efficacy and, more generally, their motivation (Guthrie & Wigfield, 2000). Quantitative and qualitative aspects of teaching convey expectations about students’ ability which affect their levels of engagement and sense of being in control. These aspects include such things as text selection. Culturally and linguistically diverse students seem to be especially likely to encounter teaching which conveys low expectations (Dyson, 1999). A number of schooling improvement studies have shown how such expectations can be changed. In general, changes to beliefs about students and more evidence-based decisions about instruction are both implicated, often in the context of school-wide or even cluster-wide initiatives (Bishop, 2004; Phillips et al., 2004; Taylor et al., 2005).

Just as with the components of reading comprehension, it follows that low progress could be associated with teaching needs in one or more of these areas. Out of this array of teaching and learning needs, those for students and teachers in any particular instructional context will have a context-specific profile. While our research-based knowledge shows that there are well-established relationships, the patterns of these relationships in specific contexts may vary. A simple example might be whether the groups of students who make relatively low progress in a particular context, such as a cluster of similar schools serving similar communities, have difficulties associated with decoding, or with use of strategies, or both, and how the teaching that occurs in those schools is related to those difficulties.

Several possible explanations for the low levels of reading comprehension are tested in the following research. One is that children’s comprehension levels are low because of low levels of accurate and fluent decoding (Tan & Nicholson, 1997). A second is that children may have learned a limited set of strategies; for example, they may be able to recall well, but are weaker in more complex strategies for drawing inferences, synthesising and evaluation; or they may not have been taught well enough to control and regulate the use of strategies (Pressley, 2002). Other possible contributing reasons might be more to do with language: that is, children’s vocabulary may be insufficient for the texts used in classroom tasks (Biemiller, 1999); or they may be less familiar with text genres. Well-known patterns of “Matthew effects” may be present in classrooms, where culturally and linguistically diverse children receive more fragmented instruction focused on decoding or relatively simple forms of comprehending, or receive relatively less dense instruction, all of which compounds low progress (McNaughton, 2002; Stanovich, West, Cunningham, Cipielewski, & Siddiqui, 1996). There is also a set of possible hypotheses around whether the texts, instructional activities and the pedagogy of the classroom enable cultural and linguistic expertise to be incorporated into and built on in classrooms (Lee, 2000; McNaughton, 2002). But each of these needs to be checked against the patterns of instruction in the classrooms in order for the relationships to be tested.

This approach, which focuses on the need to understand specific profiles, has an implication for meeting the challenges posed by Pressley (2002) and Sweet and Snow (2003). Rather than test in an ad hoc way the significance of certain teaching and learning relationships, what we did in the study was to test a package of targeted relationships. These are relationships initially identified through a process of profiling both learning needs and patterns of existing instruction. The analysis is aimed at adding further to our research-based knowledge of relationships between teaching and learning in specific contexts, and thereby contributing to the research and application challenges signalled by Pressley (2002) and Sweet and Snow (2003).

We assume in this profiling that while much is known, there are still some areas where we need more knowledge and analysis. This need is pressing in the context of cultural and linguistic diversity. An example in our contexts is the role of activation and deployment of background knowledge. A theoretical argument is often made that instruction needs to incorporate more of the cultural and linguistic resources that minority children bring to classrooms (McNaughton, 2002). But complementing this is another argument: that students need to develop more awareness of the requirements of classroom activities, including the relationships between current resources and classroom activities (McNaughton, 2002). While the general hypothesis of the significance of background knowledge is well demonstrated in controlled studies of reading comprehension (Pressley, 2002), the particular idea of teachers being able to incorporate this, and balancing it with enhancing awareness of classroom requirements, has not been well tested.

In the following study we draw on known properties of effective comprehension and on known relationships between types of instruction and learning outcomes. But we apply this knowledge in an intervention context. Within that context, we test the significance of the assumed relationships and features of teaching and learning. Because the context includes substantial numbers of children for whom English is a second language and who come from diverse cultural backgrounds, this is also a context for discovering new learning needs and new relationships between teaching and learning.

Professional learning communities and critical analysis of evidence

A previous study, focused on literacy achievement over the transition to school, demonstrated substantial gains across a cluster of 12 decile 1 urban schools with primarily Māori and Pasifika students (Phillips, McNaughton & MacDonald, 2001). Among other things, the programme involved intensive collection and analysis of achievement data within schools and across a group of schools. Instructional approaches were modified to impact more strongly on increasing student engagement and teaching effectiveness around agreed goals. Team leaders within schools led professional communities. While the initial development took place within schools over six months, the programme has now been in place in schools for several years. Follow-up research has indicated that those schools which maintained and built on these processes through a professional learning community focused on teaching and learning have increased student achievement over time (Timperley, Phillips & Wiseman, 2003).

The features of these learning communities appear similar to those described by Newman, Smith, Allensworth and Bryk (2001). They identify high “instructional programme coherence” as a necessary condition for improvements in student achievement that are more likely to be sustained over time. These authors define high instructional programme coherence as “a set of interrelated programmes for students and staff that are guided by a common framework for curriculum, instruction, assessment, and learning climate and that are pursued over a sustained period” (p. 229). The elements they identify as crucial to high instructional programme coherence can be seen in the Phillips, McNaughton and MacDonald (2004) programme. They include a common instructional framework for teaching literacy across all schools involved in the programme; teachers working together to implement the common programme over a sustained period of time; and assessments which are common across time. Both the New Zealand programme and the high programme coherence schools in the USA rely on long-term partnerships between schools and external support organisations, the development of a common framework for literacy diagnosis which every teacher has to implement, expected collaboration between teachers, joint decision-making about which assessments to use, and similar factors.

Underlying many of the features of schools with high programme coherence is the use of evidence to guide and evaluate teaching practices. For example, the aim of the Analysis and Use of Student Achievement Data (AUSAD) initiative was for practitioners to use student achievement data to inform practice. This has led directly to the planning of classroom programmes designed specifically to meet the needs of students in these diverse urban schools. The partnership has responded to the increasing calls for greater understanding of the teaching and learning of comprehension to inform practice in New Zealand (e.g. Literacy Task Force, 1999; Learning Media, 2003) and internationally (Pressley, 2002).

Similarly, critical analysis of student data is identified as significant in school and teaching effectiveness research (e.g. Hawley & Valli, 1999; Robinson & Lai, 2006). In their literature review on effective professional development, Hawley and Valli (1999) identify critical analysis as a more effective form of professional development than traditional workshop models. The collection, analysis and discussion of evidence were features of the schools that maintained gains in the Phillips et al. (2004) programme (Timperley et al., 2003).

A general question that arises is how much the critical analysis process contributes to changes in student achievement in successful programmes. In the research and development programme reported here, the question concerns its contribution to the development of more effective teaching of reading comprehension in schools serving culturally and linguistically diverse students in low income communities. The collection, analysis and discussion process took place through collective analysis and problem solving, in which teachers collaborated with researchers and professional developers to co-construct the professional development. It is important to note here our assumption that professional expertise was distributed within and across schools, and that teachers would be able to contribute as co-participants in a research-based collaboration (McNaughton, 2002). The issue of how teachers are viewed is particularly salient in the New Zealand context, as recent research syntheses show that school effects are consistently smaller than teacher/class level effects. These latter effects can account for up to 60 percent of the variance in student achievement, depending on the subject areas, level of schooling and outcome of interest, as estimated by Alton-Lee (2004).

This sort of collective problem solving represents one way of balancing two tensions identified in effective educational interventions (Coburn, 2003; Newman et al., 2001). One tension is between guaranteeing fidelity by adhering to a set of instructional procedures used in well-researched interventions, and developing procedures which are derived from context-specific problem solving but may have a less well-established research base. A related tension is between importing a set of procedures, in a way which risks undermining local autonomy and efficacy, and a more collaborative development of common procedures, which risks losing instructional coherence. It seems to us possible to build fidelity to a common, strongly contextualised programme by developing a highly focused collaborative context. There is research evidence suggesting that approaches in which professional development focuses on joint problem solving around agreed evidence, such as student achievement outcomes, are more likely than predetermined programmes to result in sustainable improvements in student achievement, particularly in reading comprehension (Coburn, 2003; Hawley & Valli, 1999; Timperley, Phillips & Wiseman, 2003).

Evidence is critical to the processes of developing a professional learning community capable of solving the instructional problems associated with more effective teaching. Systematic assessment for formative and diagnostic purposes is essential in order to avoid the problems we have found before, where educators assume that children need a particular programme or approach, but close inspection of the children’s profiles shows that they already have the skills targeted in those approaches (McNaughton, Phillips, & MacDonald, 2003). The significance of collecting and analysing data, rather than making assumptions about what children need (and what instruction should look like), was recently underscored by Buly and Valencia (2002). Policy makers in the State of Washington had mandated programmes without actually analysing the profiles of low progress students, who had been identified by their fourth grade National Assessment of Educational Progress (NAEP) scores. The assumption underlying policies and interventions was that poor performance reflected students’ difficulties with more basic decoding abilities. Yet there was little data to support this assumption, and little evidence to show that focusing on such skills would improve comprehension at fourth grade. Using a broad band of measures, Buly and Valencia identified five groups of low progress readers, some of whom did indeed have limited fluency and accuracy in decoding. However, mandating phonics instruction for all students who fell below the proficiency levels had missed the needs of the majority of students, whose decoding was strong, but who struggled with comprehension or language requirements for the tests. This finding highlights the need for research-based applications of best practice, based on analyses of student needs. One particular need that has been identified in other countries is for more effective teaching of reading comprehension than has typically been the case (Sweet & Snow, 2003).

The issue of sustainability

Developmental sustainability

A major challenge has been created by the advances made in schooling improvement and increasing instructional effectiveness through professional development. This is the issue of sustainability (Coburn, 2003). For the following research and development programme, sustainability has two meanings. The immediate concern facing the schools in South Auckland has been the need to build further progress in literacy, adding to the more effective instruction in the early years. This inevitably means considering the quality of the teaching and learning of comprehension (Sweet & Snow, 2003). The issue in the decile 1 schools is that the subsequent instructional conditions set channels for further development, and if the channels are constructed for relatively ‘low’ gradients of progress, this creates a need for further intervention. Unfortunately, as we have already noted and describe further below, the available evidence shows that despite the gains in decoding, there were still wide and possibly increasing disparities in achievement on comprehension tasks for Māori and Pasifika children, particularly in low decile schools (Flockton & Crooks, 2001; Hattie, 2002; Lai, McNaughton, MacDonald & Farry, 2004).

The reason for needing to deliberately build this sustainability resides in the developmental relationships between decoding and comprehension. Logically, there are relationships such as the one identified by Tan and Nicholson (1997), who showed that poor decoding was associated with poor comprehension. It makes perfect sense that if you can’t get the words off the page, you can’t comprehend. The problem is that the reverse does not follow: decoding may be a necessary condition, but it is not a sufficient condition. So being a better decoder does not automatically make you a better comprehender.

The developmental reason for this can be found in Paris’s (2005) multiple components model of literacy development, or Whitehurst and Lonigan’s (2001) ‘inside outside’ model of the strands of literacy development. Both models explain that there are different developmental patterns associated with acquisition for components such as items, and for language meaning and uses, and that these are somewhat independent. This accounts for the phenomenon of rapid, accurate decoders who are not able to comprehend, which is described by professional educators and researchers (McNaughton, Lai, MacDonald & Farry, 2004). There is another developmental reason. Inoculation models do not apply to most phenomena in teaching and learning; just because you know and can do some stuff this year doesn’t mean that you automatically make further gains next year. It depends at least in part on whether the teacher you meet effectively enables you to build on to and extend your learning. Fluent, accurate decoding is a necessary but not a sufficient condition for developing further comprehension skills (Block & Pressley, 2002; Sweet & Snow, 2003).

Sustainability of effective professional learning community

There is a second meaning for sustainability. We now need to know which properties of teaching practices in schools enable success to be sustained with new cohorts of students and new groups of teachers joining schools (Timperley, 2003). Identifying effective practices is one challenge; sustaining them is an additional one. Sustaining high quality intervention, it now seems, depends on the degree to which a professional learning community is able to develop (Coburn, 2003; Toole & Seashore, 2002). Such a community can effectively change teacher beliefs and practices (Annan, Lai, & Robinson, 2003; Hawley & Valli, 1999; Timperley & Robinson, 2001).

Several critical features of a collaboration between teachers and researchers are predicted to contribute to the development of such a community (Coburn, 2003; Toole & Seashore, 2002; Robinson & Lai, 2006). One is the need for the community’s shared ideas, beliefs and goals to be theoretically rich. This shared knowledge is about the target domain (in this case, comprehension); but it also entails detailed understanding of the nature of teaching and learning related to that domain (Coburn, 2003). A further area of belief that has emerged as very significant in the achievement of linguistically and culturally diverse students in general, and indigenous and minority children in particular, is teachers’ expectations about children and their learning (Bishop, 2004; Delpit, 2003; Timperley, 2003).

Being theoretically rich requires consideration not only of researchers’ theories, but also of practitioners’ theories, and of adjudication between them. Robinson and Lai (2006) provide a framework by which different theories can be negotiated, using four standards of theory evaluation. These standards are accuracy (empirical claims about practice are well founded in evidence); effectiveness (theories meet the goals and values of those who hold them); coherence (competing theories from outside perspectives are considered); and improvability (theories and solutions can be adapted to meet changing needs, or to incorporate new goals, values and contextual constraints).

This means that a second feature of an effective learning community, already identified above, is that its goals and practices for an intervention are based on evidence. That evidence should draw on close descriptions of children’s learning as well as descriptions of patterns of teaching. Systematic data on both learning and teaching would need to be collected and analysed together. This assessment data would need to be broad based, in order to understand the children’s patterns of strengths and weaknesses, to provide a basis for informed decisions about teaching, and to clarify and test hypotheses about how to develop effective and sustainable practices (McNaughton, Phillips & MacDonald, 2003). This means that the evidence needs to include information about instruction and teaching practices.

However, what is also crucial is the validity of the inferences drawn, or claims made, about that evidence (Robinson & Lai, 2006). The case reported in Buly and Valencia (2002), for example, shows how inappropriate inferences drawn from the data can result in interventions that are mismatched to students’ learning needs. Robinson and Lai (2006) suggest that all inferences be treated as competing theories and evaluated.

So a further required feature is an analytic attitude to the collection and use of evidence. One part of this is that a research framework needs to be designed to show whether and how planned interventions do in fact impact on teaching and learning, enabling the community to know how effective interventions are in meeting its goals. The research framework adopted by the community needs therefore to be staged so that the effect of interventions can be determined. The design part of this is by no means simple, especially when considered in the context of recent debates about what counts as appropriate research evidence (McCall & Green, 2004; McNaughton & MacDonald, 2004).

Another part of the analytic attitude is critical reflection on practice, rather than a comfortable collaboration in which ideas are simply shared (Annan, Lai & Robinson, 2003; Ball & Cohen, 1999; Toole & Seashore, 2002). Recent New Zealand research indicates that collaborations which incorporate critical reflection have been linked to improved student achievement (Phillips et al., 2004; Timperley, 2003) and to changed teacher perceptions (Timperley & Robinson, 2001).

A final feature is that the researchers’ and teachers’ ideas and practices need to be culturally located. We mean by this that the ideas and practices that are developed and tested need to entail an understanding of children’s language and literacy practices, as these reflect children’s local and global cultural identities. Importantly, this means knowing how these practices relate (or do not relate) to classroom practices (New London Group, 1996).

The main research project

This project is a result of a three-year research and development partnership between the Ministry of Education Schooling Improvement Initiative AUSAD, the seven schools in the Mangere cluster, and the Woolf Fisher Research Centre at the University of Auckland.[2] The representatives from the seven schools formed a Senior Assessment Team (SAT) to work with researchers, the Ministry of Education and the Initiative leaders on developing an intervention to raise student achievement.

The collaboration involved an innovative approach to research–practice partnerships. The purpose was to determine the extent of the challenges for effective teaching of comprehension, and to create better teaching methods to meet those challenges. As part of this, a cluster-wide intervention took place for all teachers teaching classes at Years 4–8 (and, in one school, Year 9) in the seven schools. This required extensive school-based professional development, as well as systematic collection of achievement data and classroom observations within a rigorous research design. The research-based intervention was designed to test both the discrete components of effective teaching in school-wide implementation, and the model developed for a research-school practice partnership.

Embedded in the overall programme was a Samoan bilingual study. This involved two of the schools, with 177 Samoan children in six classes in Years 4–8. While the overall project’s main purpose was to enhance the comprehension achievement of all children, the Samoan bilingual study was an attempt to address how Samoan students and Samoan teachers learn and teach comprehension, given that they can speak and understand two languages (Samoan and English).

Research questions

This study: aims and research questions

This study aimed to raise reading comprehension achievement among students in seven Mangere schools, through a planned and sequenced research-based collaboration. The study addresses several areas of strategic importance to New Zealand, as noted above.

The study also addresses specific theoretical questions. These are to do with the development of reading comprehension; effective instruction for reading comprehension; the development and role of professional learning communities; the role of (contextualised) evidence in planned interventions; and the nature of effective research collaborations with schools. The specific research questions were:

- Can a research-practice collaboration with seven decile 1 schools develop cluster-wide and school based professional learning communities that are able to critically analyse and problem solve issues of instructional effectiveness, thereby developing more effective instruction that has a powerful educationally significant impact on Māori and Pasifika children’s comprehension at Years 4–9?

- Can a set of effective instructional activities be identified that are able to be used by teachers to enhance the teaching of comprehension for Māori and Pasifika children in Years 4–9 in decile 1 schools?

A general hypothesis derived from these areas is that:

instructional approaches to reading comprehension present in the cluster of schools could be fine-tuned to be more effective in enhancing achievement through a research–practice collaboration, and the development of professional learning communities, using contextualised evidence of teaching and learning.

The research base for each of these areas is outlined in the following sections.

Samoan bilingual study

- The aim of the Samoan bilingual study was to test the broader assumption that a major reason for lower than expected achievement by Samoan students on comprehension tests in schools was less than effective teaching.

What this report covers

This report describes the results of the research and development programme in action, as researchers and practitioners developed communities to meet the challenge of building more effective instruction for reading comprehension in linguistically and culturally diverse urban schools. The design methodology and frameworks for the interventions are described in Chapter 2. Chapter 3 describes the results of these interventions for the overall three-year research and development partnership between schools and researchers. Results for the study of students in Samoan bilingual classes follow in Chapter 4. In the final chapter, results are summarised and discussed.

2. Methods

The overall partnership involved schools in the Ministry of Education Mangere Analysis and Use of Student Achievement Data (AUSAD) school improvement initiative, the initiative leaders, the Woolf Fisher Research Centre (University of Auckland) and Ministry of Education representatives.

Main study participants

Schools

The study involved seven decile 1 Mangere schools. Two of these schools are contributing schools (Year 1–Year 6); three are full primary schools (Year 1–Year 8); one is an intermediate school (Year 7–Year 8); and one is a middle school (Year 7–Year 9). The schools ranged in size from 292 students to 593 students.

Students

In the following study, we report on several overlapping groups of students. The first group consists of all the students present at the beginning of the three-year study (Baseline sample). The second consists of three cohorts of students, initially from Year 4, Year 5, and Year 6, who were followed longitudinally for three years. The third group consists of all students who were present at the beginning and at the end of each year.

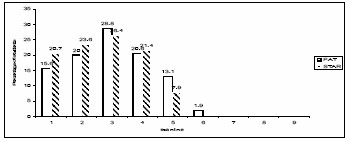

Overall baseline samples

Baseline data (February 2003) were collected from 1216 students in six of the schools (one school, which joined the partnership, was unable to participate in the first round of data collection) at the following levels: Year 4 (mean age 8 years, n=205); Year 5 (mean age 9 years, n=208); Year 6 (mean age 10 years, n=265); Year 7 (mean age 11 years, n=267); and Year 8 (mean age 12 years, n=271). The total group consisted of equal proportions of males and females from 14 ethnic groups. Four main groups made up 87 percent of the sample. These groups were Samoan (33%), Māori (20%), Tongan (19%) and Cook Island (15%). Approximately half the children had a home language other than English.
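As a quick arithmetic check on the baseline figures, the counts by year level can be summed and compared with the reported total of 1216, and the shares of the four main groups compared with the reported 87 percent. The sketch below simply restates the reported numbers in Python; the variable names are illustrative and not part of the study.

```python
# Reported baseline sample sizes (February 2003), by year level.
baseline_n = {
    "Year 4": 205,
    "Year 5": 208,
    "Year 6": 265,
    "Year 7": 267,
    "Year 8": 271,
}

# Reported percentage share of the four largest ethnic groups.
main_groups_pct = {"Samoan": 33, "Māori": 20, "Tongan": 19, "Cook Island": 15}

total_students = sum(baseline_n.values())
main_groups_total = sum(main_groups_pct.values())

print(f"Baseline total: {total_students}")              # Baseline total: 1216
print(f"Four main groups: {main_groups_total} percent")  # Four main groups: 87 percent
```

Both sums agree with the figures given in the text.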

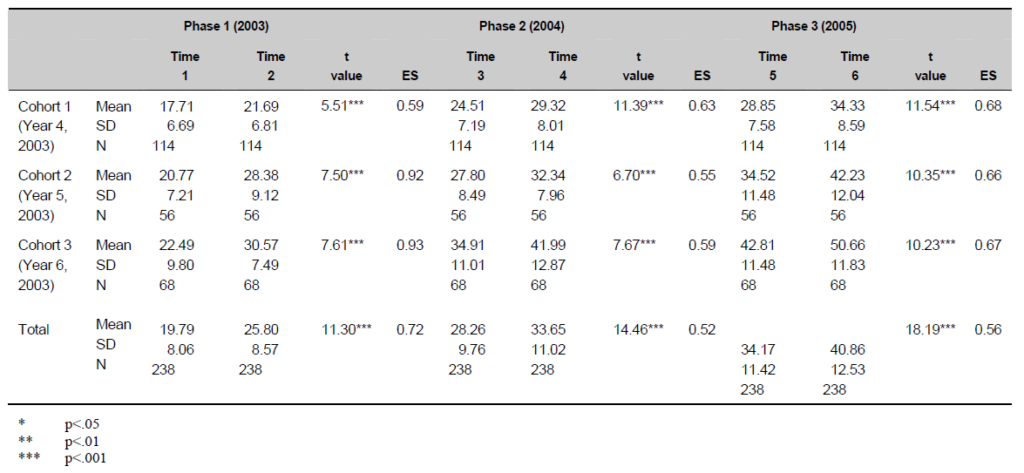

Longitudinal cohorts

Three cohorts of students were followed longitudinally from Time 1 to Time 6; these were the students present at all six time points, a total of 238 students. Cohort 1 (n=114) consisted of students who were in Year 4 at Time 1; Cohort 2 (n=56), students who were in Year 5 at Time 1; and Cohort 3 (n=68), students who were in Year 6 at Time 1. These students were a subset of the students included in the baseline sample.

Overall group year by year

A third group of students consisted of those present at the beginning and end of each year. In the first year (2003), n=1216; in the second year (2004), n=1683; and in the third year (2005), n=1619. All of the students in the longitudinal cohorts were part of these groups, but these groups also included students who were present for only a single year, such as Year 7 and Year 8 students, new Year 4 students (in the second and third years), and new students who arrived at the school and stayed at least a year.
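The longitudinal cohorts and the year-by-year groups differ only in which assessment points a student must have attended. The sketch below expresses both selection rules in Python over hypothetical attendance records. It assumes, for illustration, that Time 1 and Time 2 are the start and end of the first study year, Time 3 and Time 4 of the second, and Time 5 and Time 6 of the third; the record format, the function names and the toy data are not from the study.

```python
from typing import Dict, List, Set

# Hypothetical records: student ID -> set of assessment time points attended.
# Assumption: Time 1/2 = start/end of study year one, 3/4 = year two, 5/6 = year three.
Records = Dict[str, Set[int]]


def longitudinal_cohort(records: Records, n_points: int = 6) -> List[str]:
    """Students assessed at every time point from Time 1 to Time n_points."""
    required = set(range(1, n_points + 1))
    return sorted(sid for sid, times in records.items() if required <= times)


def year_group(records: Records, year: int) -> List[str]:
    """Students assessed at both the start and the end of the given study year."""
    start, end = 2 * year - 1, 2 * year
    return sorted(sid for sid, times in records.items() if {start, end} <= times)


if __name__ == "__main__":
    # Toy data for illustration only, not drawn from the study.
    records = {
        "s01": {1, 2, 3, 4, 5, 6},  # present throughout
        "s02": {1, 2},              # e.g. a Year 8 student who moved on after one year
        "s03": {3, 4, 5, 6},        # e.g. a new Year 4 student in the second year
        "s04": {1, 2, 3, 5, 6},     # missed the end-of-year assessment in year two
    }
    print(longitudinal_cohort(records))  # ['s01']
    print(year_group(records, 1))        # ['s01', 's02', 's04']
    print(year_group(records, 2))        # ['s01', 's03']
    print(year_group(records, 3))        # ['s01', 's03', 's04']
```

Under the same assumptions, calling `longitudinal_cohort` with `n_points=4` corresponds to the four-point (two-year) cohorts used in the Samoan bilingual study. The sketch also shows why the longitudinal cohorts are much smaller than any single year's group: one missed assessment removes a student from the cohort but not from the other years' groups.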

Teachers

Around 70 teachers were involved in each year of the project, including literacy leaders. Characteristics of the teachers varied somewhat from year to year, but in general, around two-thirds had five or more years of experience, and 10 percent were beginning teachers. A total of 11 percent were in bilingual classes (including Samoan, Tongan and Māori bilingual classes). In the second year, 25 of the teachers (a third) were Pasifika or Māori.

Samoan bilingual study participants

Students

Samoan bilingual baseline samples

Within the overall study, Year 4–Year 8 students from six Samoan bilingual classrooms were involved. Between 24 and 30 students were enrolled in each of the classes across the two years. A cross-section of students at different school years, from Year 4 (aged 8) to Year 8 (aged 13), was assessed at baseline at the beginning of the 2003 school year. This cross-sectional group provided a baseline against which cohorts in the classrooms of the teachers receiving the professional development could be compared.

The six Samoan bilingual classes operated in two schools (School A and School B) that were involved in the larger project. School A had three bilingual classrooms: a Year 7 classroom (12-year-olds), a Year 7/8 composite classroom (12- and 13-year-olds), and a Year 8 classroom (13-year-olds). These classrooms were in close proximity to each other, being located in one school building. School B also had three bilingual classrooms. Two composite classes, a Year 4/5 (8- and 9-year-olds) and a Year 5/6 (9- and 10-year-olds), were housed in one part of the school; the third, a Year 7/8 classroom, was housed in another part.

Samoan bilingual longitudinal cohorts

A second group comprised several longitudinal cohorts drawn from the original baseline students. At each year level, students who were continuously present at all four time points (the beginning, assessed in February, and the end, assessed in November, of both the 2003 and 2004 school years) were identified and described. Cohorts at each year level, from Year 4 through to Year 8, were repeatedly measured over two years. In the second year, the Year 8 cohort moved on to secondary schools. Because of the large movement of students into and out of the classrooms, the baseline group included 140 students, but the longitudinal cohorts contained only between 10 and 35 students at each age level.

Samoan mainstream samples

For comparison purposes, Samoan students in mainstream classes (n = 62 classes) from all seven schools involved in the overall project were identified. These students came from the same communities, but because there were only 67 Samoan students across Time 1 and Time 4 in School A and School B, it was decided to include all Samoan students in mainstream classrooms in the wider study for comparison. The number of mainstream students across the two years ranged from 345 to 456. As with the bilingual classes, two samples of students were used. Again, a cross-sectional baseline of students from different school years, from Year 4 (aged 8) to Year 8 (aged 12), was assessed at the beginning of the 2003 school year. In addition, longitudinal cohorts were identified. At each year level, students who were continuously present at all four time points (the beginning, assessed in February, and the end, assessed in November, of both the 2003 and 2004 school years) were identified and described. Cohorts at each year level, from Year 4 through to Year 8, were repeatedly measured over two years. In the second year, the Year 8 cohort moved on to secondary schools. Because of the large movement of students into and out of the classrooms, the baseline group comprised 345 students at Time 1 (with 451 mainstream students at Time 2, 456 at Time 3 and 422 at Time 4), but the longitudinal cohorts contained only between 24 and 48 students at each age level.

Teachers

The six teachers comprised five female teachers (two from School A and three from School B) and one male teacher (School A). Three teachers were originally from Samoa (Teachers 1, 2 and 6). Teachers 1 and 2 had undergone teacher retraining in New Zealand; Teacher 6 had not. The other three teachers (Teachers 3, 4 and 5) were trained in New Zealand. Half of the teachers were in the 25–35 age range and the other half in the 36–45 range. Teacher qualifications ranged from the Diploma in Teaching (with one teacher completing the Bachelor of Education degree) to the Bachelor of Education (Teaching), and the Bachelor of Teaching and Graduate Diploma in Teaching. Two held English for Speakers of Other Languages (ESOL) diplomas.

School reading comprehension lessons

Observations carried out as part of the intervention (see below) provided a general description of the reading programmes across the phases through which the intervention was delivered. Generally, the programme was similar across classes and schools, and similar to general descriptions of New Zealand teaching in the middle grades (Smith & Elley, 1994; Ministry of Education, 2006). A 10–15-minute whole-class activity, mostly introducing and sharing a text (often a narrative text) or reviewing the previous day’s work, was usually followed by a 30–40-minute guided reading session in small groups, led by the teacher using an instructional text. Group activities included text study and analysis (such as studying plot or character in narrative texts, and extracting and using information in informational texts), specific group or paired forms of instructional/guided reading (such as ‘reciprocal teaching’), and individual or group project work (such as developing taxonomies of categories introduced in science topics). Typically, the teacher worked with two groups during this period and held conferences on the run with other groups.

Levels of engagement were generally high, with well-established routines and many instances of teacher–student and student–student interaction. Whole-class activities typically occurred on 3–5 days per week, and small-group work with one or two groups often occurred daily, so that each group had between one and three sessions with direct teacher guidance each week. However, the frequency of contact with each group varied markedly between schools. When they were not with the teacher, groups did a range of activities; some had developed to the point of being able to run reciprocal teaching with peer guidance alone. In most classrooms, worksheets were used, sometimes related to the texts; these contained questions about a text and often included sentence, word or sub-word studies.

Design

Rationale for the quasi-experimental design

At the core of the following analyses is a quasi-experimental design from which qualified judgements about possible causal relationships are made. While it has been argued that the gold standard for research into schooling improvement is a full experimental design, preferably involving randomised control and experimental groups over trials (McCall & Green, 2004), a quasi-experimental design was adopted for two major reasons. The first is the inapplicability of a randomised control group design for the particular circumstances of this project. The second is the usefulness of the quasi-experimental design format, given the applied circumstances.

Schools are open and dynamic systems. Day-to-day events change the properties of teaching and learning and the conditions for teaching and learning effectively. For example, in any one year teachers come and go, principals may change, the formula for funding might be altered, and new curriculum resources can be created. More directly, teachers and schools constantly share ideas, participation in professional conferences and seminars adds to the shared information, and new teachers bring new knowledge and experiences. Such inherent features of schools are compounded when the unit of analysis is a cluster of schools that deliberately share resources, ideas and practices.

This ‘messiness’ creates tensions for a randomised experimental and control group design. On the one hand, internal validity requires that these sources of influence be controlled, so that unknown effects do not bias or confound the demonstration of experimental effects. On the other hand, if schools are changed to reduce these influences so that, for example, there is no turnover in teaching staff, external validity is severely undermined, because these conditions may no longer be typical of schools in general.

It is of course possible to conceive of selecting sufficiently large numbers of teachers or schools to randomly assign. Then one assumes that the ‘messiness’ is distributed randomly. If the teachers and the schools in the total set are ‘the same’, then the error variance associated with this messiness is distributed evenly across experimental and control classrooms and schools. Leaving aside the challenges which large numbers of schools pose, a problem here is the assumption that we know what makes teachers and schools similar, and hence are able to be sure about the randomisation process. This is a questionable assumption to make. For example, in the current project the presence of bilingual classrooms in some schools, with different forms of bilingual provision, would create difficulties for random assignment as well as for comparability across teachers, let alone across schools. So what counts as an appropriate control is not necessarily known. There may also not be enough instances of different types of classrooms or schools even to attempt random assignment.

There is another difficulty: that of withholding treatment from the control group of schools. Just about any well-resourced, planned intervention is likely to have an effect in education (Hattie, 1999). The act of deliberately withholding treatment, as required in control group designs, raises ethical concerns. Some research groups in the United States, also concerned with educational enhancement in schools serving poor and diverse communities, have deliberately adopted alternatives to randomised experimental and control group designs because of ethical concerns for those settings not gaining access to the intervention (Pogrow, 1998; Taylor et al., 2001). Hattie (1999) proposed that the ethical difficulty could be overcome by comparing different interventions, thus not withholding potential benefits from any group. This is not always a workable solution, for example when the theoretical question is about the effects of a complex multi-component intervention that reformats existing teaching in a curriculum area, such as literacy instruction. Here there is no appropriate alternative intervention other than existing conditions. The American Psychological Association has detailed guidelines for conditions under which withholding treatment is justified. For example, if an intervention is shown to be effective, then it should be implemented in the control group. This route has similarities with the design proposed below.

The most damaging problem, however, is the underlying logic of experimental and control group designs. In these designs, the variation within each group (given the simple case of an experimental and a control group) is conceived as error variance and, when substantially present, is seen as problematic. The alternative design adopted below is based on a view of variability as inherent to human behaviour generally (see Sidman, 1960), and specifically present in applied settings (Risley & Wolf, 1973). It deliberately incorporates variability and the sources of the variability into the design. Questions about the characteristics and sources of variability are central to knowing about effective teaching and learning, and can be explored within the design. Such a design is more appropriate to the circumstances of building effectiveness over a period of time, given that the variability is an important property (Raudenbush, 2005). Similarly, such designs are useful when planning for sustainability with ongoing partnerships. In fact, longitudinal designs are recommended in which sources of variability are closely monitored and related to achievement data, such as levels of implementation, the establishment of professional learning communities, coherence of programme adherence, and consistency of leadership and programme focus over time (Coburn, 2003). These are all matters of concern in the research reported here.

Repeated measures of children’s achievement were collected in February 2003 (Time 1), November 2003 (Time 2), February 2004 (Time 3), November 2004 (Time 4), February 2005 (Time 5) and November 2005 (Time 6) as part of the quasi-experimental design (Phillips, McNaughton & MacDonald, 2004). One further time point (February 2006) was included in this report to strengthen the evidence of sustained change. The design uses single-case logic within a developmental framework of cross-sectional and longitudinal data. The measures at Time 1 generated a cross-section of achievement across year levels (Years 4–8), which provided a baseline forecast of the expected trajectory of development had the planned interventions not occurred (Risley & Wolf, 1973). Successive stages of the intervention could then be compared with the baseline forecast. The first of these planned interventions was the analysis and discussion of data. The second was the development of instructional practices. The third was a phase in which sustainability was promoted. This design, which includes replication across cohorts, provides a high degree of both internal and external validity. The internal validity comes from the in-built testing of treatment effects described further below; the external validity comes from the systematic analysis across schools within the cluster.
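The baseline-forecast comparison can be illustrated with a small Python sketch. This is not the analysis used in the project; it assumes that scores (for example, stanines) are grouped by year level, that the Time 1 cross-sectional mean at each year level serves as the forecast, and that a cohort is compared with the forecast for whichever year level it has reached at a later February testing point. The function names `baseline_forecast` and `gains_over_forecast` are hypothetical, and the numbers in the usage lines are made up purely for illustration.

```python
from __future__ import annotations

from statistics import mean


def baseline_forecast(cross_section: dict[int, list[float]]) -> dict[int, float]:
    """Mean score at each year level in the Time 1 cross-section.

    Assumption (illustrative only): the Time 1 mean for Year N is taken as the
    forecast for any cohort that later reaches Year N without the intervention.
    """
    return {year: mean(scores) for year, scores in cross_section.items() if scores}


def gains_over_forecast(
    forecast: dict[int, float], cohort: dict[int, list[float]]
) -> dict[int, float]:
    """Observed cohort mean minus the forecast mean, per year level reached.

    `cohort` maps the year level the tracked students have reached to their
    scores at that point. Year levels outside the baseline range are skipped.
    """
    return {
        year: mean(scores) - forecast[year]
        for year, scores in cohort.items()
        if year in forecast and scores
    }


if __name__ == "__main__":
    # Made-up scores, not project data.
    forecast = baseline_forecast({4: [3, 4, 5], 5: [4, 4], 6: [5]})
    print(gains_over_forecast(forecast, {5: [5, 6], 6: [5, 7], 7: [6]}))
    # prints {5: 1.5, 6: 1}
```

A positive difference means the cohort is ahead of where the baseline cross-section forecast it would be at that year level; a value near zero suggests the cohort is following the forecast trajectory. Year 7 is dropped in the usage example only because the made-up baseline stops at Year 6.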

The cross-sectional baseline was established at Time 1 (February 2003). Students from that initial cross-section were then followed longitudinally and re-tested at Times 2 to 6, providing repeated measures over three school years. Two sorts of general analyses using repeated measures are possible. Analyses can be conducted within each year. These are essentially pre- and post-measures. But because they can be corrected for age through transformation into stanine scores (Elley, 2001), they provide an indicator of the impact of the three phases against national distributions at similar times of the school year. However, a more robust analysis of relationships with achievement is provided by the repeated measures within the quasi-experimental design format. These analyses show change over repeated intervals.
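The within-year analyses rely on converting raw scores to stanines. A minimal Python sketch of the general idea follows, assuming the conventional stanine bands (4, 7, 12, 17, 20, 17, 12, 7 and 4 percent of the norm group for stanines 1 to 9) and a mid-rank definition of percentile rank. In practice the published STAR norm tables (Elley, 2001) for the relevant year level and time of year would be used instead; the helper names `percentile_rank` and `stanine` and the norm sample in the usage lines are hypothetical.

```python
from bisect import bisect_right

# Cumulative upper percentile bounds for stanines 1-8
# (conventional bands of 4, 7, 12, 17, 20, 17, 12, 7 and 4 percent).
STANINE_CUTOFFS = [4, 11, 23, 40, 60, 77, 89, 96]


def percentile_rank(score, norm_scores):
    """Mid-rank percentile of `score` within a norm group.

    Assumption: the norm group is for the same year level and the same time
    of the school year, which is what makes the result age-corrected.
    """
    if not norm_scores:
        raise ValueError("norm group is empty")
    below = sum(1 for s in norm_scores if s < score)
    equal = sum(1 for s in norm_scores if s == score)
    return 100.0 * (below + 0.5 * equal) / len(norm_scores)


def stanine(pr):
    """Map a percentile rank (0-100) to a stanine (1-9)."""
    if not 0 <= pr <= 100:
        raise ValueError("percentile rank must be between 0 and 100")
    # Count how many band boundaries the rank has reached or passed.
    return 1 + bisect_right(STANINE_CUTOFFS, pr)


if __name__ == "__main__":
    norm = list(range(1, 11))  # made-up norm sample of raw scores 1..10
    print(stanine(percentile_rank(5, norm)))  # prints 5
```

Because each student is ranked against a norm group tested at a similar point in the school year, stanine 5 always means "average for that stage", so a February and a November stanine can be compared directly even though the raw scores would be expected to rise over the year.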

Good science requires replication (Sidman, 1960). In quasi-experimental research, the need to systematically replicate effects and processes is heightened because of the reduced experimental control the design affords. This need is specifically identified in discussions about alternatives to randomised experimental designs (Borko, 2004; Chatterji, 2005; Raudenbush, 2005). For example, McCall and Green (2004) argue that in applied developmental contexts, evaluation of programme effects requires a variety of designs, including quasi-experimental designs, but that our knowledge depends on systematic across-site analyses. Replication across sites can add to our evaluation of programme effects, particularly when it is inappropriate or premature to conduct randomised experimental designs. Such systematic replication is also needed to determine issues of sustainability (Coburn, 2003) and scaling up (McDonald, Keesler, Kauffman, & Schneider, 2006). Coburn argues that the distribution and adoption of an innovation are significant only if its use can be sustained in original and subsequent schools.

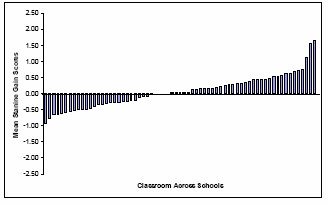

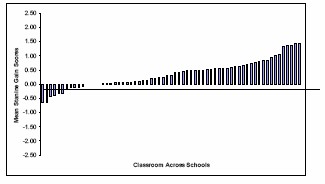

In the design used with this cluster of schools, there were in-built replications across age levels and across schools within the quasi-experimental design format. These provide a series of tests of possible causal relationships. However, there are possible competing explanations for the cluster-wide results that are difficult to counter with the quasi-experimental design. These are the well-known threats to internal validity, two of which are particularly threatening in the design adopted here.